Calculating Z-Scores In R: Comprehensive Guide For Standardization

In R, you can compute z-scores with the scale() function, which subtracts the mean and divides by the sample standard deviation: z = scale(x). Note that scale() returns a one-column matrix, so wrap the call in as.numeric() if you need a plain vector. Alternatively, you can calculate (x - mean(x)) / sd(x) manually. Both approaches yield z-scores that express the distance of each data point from the mean in units of standard deviation.

Z-Score: Understanding the Concept of Standardization

In statistics, the z-score is a standard tool for putting data on a common scale. This section covers what a z-score is, how it relates to the normal distribution, and why it matters in data analysis.

The z-score, also known as the standard score, is a statistical measure that quantifies how far a particular data point deviates from the mean of a distribution. It provides a way to compare values from different datasets on a common scale, eliminating the limitations posed by varying units and scales.

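The z-score is most often interpreted against the normal distribution, a bell-shaped curve that describes the distribution of many natural and social phenomena. Standardizing shifts and rescales the data to a mean of 0 and a standard deviation of 1, but it does not change the shape of the distribution. When the data are approximately normal, the z-scores can be read against the standard normal distribution, which makes comparisons and inferences more meaningful.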

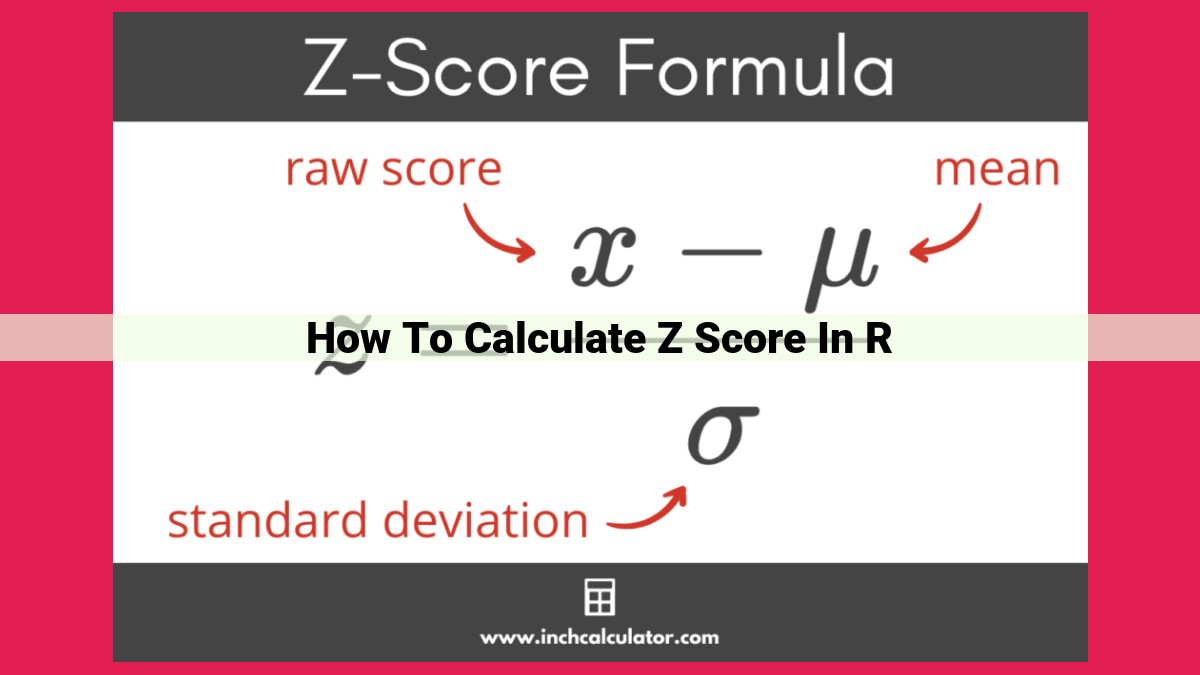

The Formula: Unveiling the Secrets of Z-Score Calculation

The formula for calculating z-score is elegantly simple:

z = (x - μ) / σ

where:

- z: z-score

- x: the raw data point

- μ: mean of the distribution

- σ: standard deviation of the distribution
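As a quick illustration, the formula can be applied by hand to a small vector. This is a minimal sketch: the numbers are made up, and because R's sd() uses the sample standard deviation (n - 1 denominator), it estimates σ rather than being the population value.

```r
# Hypothetical sample of five measurements (made up for illustration)
x <- c(2, 4, 4, 6, 9)

mu    <- mean(x)   # sample mean, used here in place of the population mean
sigma <- sd(x)     # sample standard deviation (n - 1 denominator)

# Apply z = (x - mu) / sigma to every element at once
z <- (x - mu) / sigma
print(round(z, 3))

# After standardization the values have mean 0 and standard deviation 1
# (up to floating-point rounding)
print(mean(z))
print(sd(z))
```

Values below the mean come out negative and values above it positive, which is the behavior the formula describes.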

Significance of Mean and Standard Deviation: The Pillars of Standardization

The mean (average) represents the central point of a distribution, while the standard deviation (spread) quantifies the dispersion of data points around the mean. The z-score effectively captures the distance between a data point and the mean, relative to the spread of the distribution.

Applications of Z-Score: Empowering Data-Driven Insights

Z-scores find widespread applications in various fields, including:

- Hypothesis Testing: Comparing sample statistics to population values

- Statistical Inference: Making predictions and drawing conclusions from data

- Data Analysis: Identifying outliers, patterns, and relationships within datasets

- Standardization: Rescaling data to facilitate comparisons and transformations

Limitations and Alternatives: Navigating the Z-Score Landscape

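While the z-score is a powerful tool, it has limitations. Interpreting z-scores as probabilities assumes approximate normality, and outliers can distort the mean and standard deviation on which the calculation depends. In such cases, robust measures based on the median, such as the median absolute deviation (MAD) or the interquartile range (IQR), are better suited. When the population standard deviation is unknown and must be estimated from a small sample, the t-score and Student’s t-distribution are used in place of the z-score.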

Formula for Calculating Z-Score: Unveiling the Secrets of Statistical Significance

The z-score describes how far a data point deviates from the average. This deviation is measured in standard deviations, a statistical yardstick that quantifies the typical spread of the data. The z-score formula captures the deviation in a single number, providing a standardized reference point for comparing data points across different sets.

At its core, the z-score formula is a simple calculation that employs two key parameters: the mean and standard deviation of the data set. The mean represents the central tendency or average value, while the standard deviation measures the variability or spread of the data.

The formula for calculating the z-score is:

z-score = (x - μ) / σ

where:

- x represents the individual data point

- μ (mu) represents the mean of the data set

- σ (sigma) represents the standard deviation of the data set

In essence, the z-score formula subtracts the mean from the individual data point and divides the result by the standard deviation. This process standardizes the data point, allowing for direct comparisons between data points from different distributions with varying means and standard deviations.

By transforming data into z-scores, researchers can unlock a powerful statistical tool. Z-scores provide a common scale that enables them to assess the relative position of a data point within a distribution, determine the probability of observing a given value, and conduct hypothesis testing to determine statistical significance.
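To make the idea of a common scale concrete, here is a small sketch that compares two results measured on different scales. All of the figures are hypothetical and chosen only so the arithmetic is easy to follow.

```r
# Hypothetical summary figures for two exams on different scales
math_score    <- 82;  math_mean    <- 70;  math_sd    <- 8
reading_score <- 610; reading_mean <- 550; reading_sd <- 60

# Standardize each score against its own distribution
z_math    <- (math_score - math_mean) / math_sd            # 12 / 8  = 1.5
z_reading <- (reading_score - reading_mean) / reading_sd   # 60 / 60 = 1.0

print(c(math = z_math, reading = z_reading))
```

Although 610 is the larger raw number, the math result sits further above its own mean once both are expressed in standard deviations.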

Calculating Z-Scores in R: A Concise Guide

Understanding Z-scores is important in data exploration and analysis. Z-scores, also known as standard scores, provide a way to standardize and compare data points from different distributions. This part of the post walks through calculating Z-scores in R, a widely used statistical programming language.

Delving into the Z-Score Formula

At the heart of Z-score calculation lies a simple yet profound formula:

Z = (X - μ) / σ

where:

- X represents the data point being evaluated

- μ is the mean (average) of the data

- σ denotes the standard deviation, a measure of variability

Navigating R for Z-Score Calculation

R offers an array of functions that empower us to delve into data manipulation and Z-score calculation. Let’s explore these functions in a step-by-step manner:

- Data Importation: Begin by importing your data into an R data frame using the read.csv() function.

- Mean and Standard Deviation Calculation: Use the mean() and sd() functions to calculate the mean and standard deviation of your dataset.

- Z-Score Computation: Use the scale() function to calculate Z-scores for each data point. It subtracts the mean and divides by the standard deviation in one convenient step.

An Illustrative Example in R

To solidify our understanding, let’s work through a practical example:

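# Load ggplot2 for the plot below

library(ggplot2)

# Import data (assumes sample_data.csv has a numeric column named value)

data <- read.csv("sample_data.csv")

# Calculate mean and standard deviation

data_mean <- mean(data$value)

data_sd <- sd(data$value)

# Calculate Z-scores; scale() returns a one-column matrix, so convert it to a vector

data$z_score <- as.numeric(scale(data$value))

# Visualize Z-scores (inside aes(), refer to columns by name rather than data$...)

ggplot(data, aes(x = value, y = z_score)) +

  geom_point() +

  geom_hline(yintercept = 0) +

  labs(title = "Data Distribution with Z-Scores")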
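One detail worth knowing about scale() is what it returns. The sketch below uses a small made-up vector instead of a CSV file so it can be run as-is; it shows that the result is a one-column matrix carrying the centering and scaling values as attributes, and that it matches the manual formula.

```r
x <- c(10, 12, 15, 18, 25)   # made-up values for illustration

z_mat <- scale(x)

print(is.matrix(z_mat))               # scale() returns a matrix, not a vector
print(dim(z_mat))                     # 5 rows, 1 column
print(attr(z_mat, "scaled:center"))   # the mean that was subtracted
print(attr(z_mat, "scaled:scale"))    # the standard deviation used as divisor

# Drop the matrix structure and attributes to get a plain numeric vector
z_vec <- as.numeric(z_mat)

# The result agrees with the manual calculation
print(all.equal(z_vec, (x - mean(x)) / sd(x)))
```

Converting to a plain vector before storing the result in a data frame column avoids surprises later, since some functions treat a matrix column differently from an ordinary numeric column.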

Embarking on the Journey of Z-Score Interpretation

Z-scores serve as a powerful tool for interpreting data. They provide insights into the data’s distribution and allow us to compare data points across different datasets.

- Positive Z-scores indicate values above the mean, while negative Z-scores represent values below the mean.

- The magnitude of the Z-score indicates the distance from the mean in terms of standard deviations.

- When the data are approximately normally distributed, Z-scores let us calculate the probability of observing a value at least as extreme as a given data point.

Standardization Unveiled with Z-Scores

Z-scores play a pivotal role in standardization, a process of transforming data into a common scale. This facilitates comparisons between variables measured on different scales and allows for the combination of data from multiple sources.
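As a sketch of what this looks like in practice, scale() can standardize several numeric columns of a data frame in one call. The data frame and its column names below are hypothetical.

```r
# Hypothetical data frame with two variables on very different scales
df <- data.frame(
  height_cm = c(160, 172, 181, 168, 175),
  income    = c(32000, 54000, 41000, 76000, 47000)
)

# scale() standardizes each column separately; convert back to a data frame
z_df <- as.data.frame(scale(df))
print(round(z_df, 2))

# Every column now has mean 0 and standard deviation 1
print(round(colMeans(z_df), 10))
print(sapply(z_df, sd))
```

This only works when every column is numeric; non-numeric columns would need to be dropped or handled separately first.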

Example of Calculating Z-Score in R: A Step-by-Step Guide for Statistical Significance

Z-scores are essential statistical measures that provide a standardized way of comparing data points to a normal distribution. They allow us to determine how far a given data point deviates from the mean, expressed in terms of standard deviations.

Calculating Z-Score in R using Formula

To calculate the Z-score in R, we use the following formula:

z = (x - μ) / σ

where:

- x is the data point

- μ is the mean of the distribution

- σ is the standard deviation of the distribution

Step-by-Step Guide using R Functions

In R, we can calculate Z-scores using various functions. Here’s a step-by-step guide:

- Load the data into R using the read.csv() function.

- Calculate the mean using the mean() function.

- Calculate the standard deviation using the sd() function.

- Use the scale() function to calculate the Z-scores. The scale() function subtracts the mean and divides by the standard deviation, and it returns a one-column matrix that can be converted to a vector with as.numeric().

- Visualize the distribution of the data using histograms, boxplots, or density plots to understand the spread and potential outliers.

Example Code

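# Load ggplot2 for the histogram below

library(ggplot2)

# Load the data (assumes data.csv has a numeric column named variable)

data <- read.csv("data.csv")

# Calculate mean (stored under a name that does not mask the mean() function)

data_mean <- mean(data$variable)

# Calculate standard deviation (likewise, avoid masking sd())

data_sd <- sd(data$variable)

# Calculate Z-scores; scale() returns a one-column matrix, so convert it to a vector

data$z_score <- as.numeric(scale(data$variable))

# Visualize the distribution

ggplot(data, aes(x = z_score)) +

  geom_histogram(bins = 20)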

Interpretation

The Z-score indicates how many standard deviations a data point is from the mean. Positive Z-scores indicate values above the mean, while negative Z-scores indicate values below the mean. For a normal distribution, approximately 68% of the data points fall within one standard deviation of the mean, 95% within two standard deviations, and 99.7% within three standard deviations.
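The 68%, 95%, and 99.7% figures can be checked directly with pnorm(), R's cumulative distribution function for the normal distribution. This is a short sketch that applies only to the theoretical standard normal curve; real data will match it only to the extent that they are normal.

```r
# Number of standard deviations on either side of the mean
k <- 1:3

# Probability mass of a standard normal between -k and +k
coverage <- pnorm(k) - pnorm(-k)
names(coverage) <- paste0("within_", k, "_sd")

print(round(coverage, 4))
```

The three values come out at roughly 0.6827, 0.9545, and 0.9973.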

This example provides a practical understanding of Z-score calculation in R, enabling you to analyze data and draw statistical inferences with confidence.

Interpretation of Z-Score: Unlocking Statistical Significance and Probabilities

In the realm of statistics, the z-score plays a pivotal role in deciphering the meaning behind numerical data. It allows us to understand how far a specific data point deviates from the average value within a dataset, measured in terms of standard deviations.

The z-score is calculated by subtracting the mean of the dataset from the data point in question and dividing the result by the standard deviation. This simple formula transforms the data into a standardized scale, enabling us to compare values from different distributions with varying means and standard deviations.

The magnitude of the z-score indicates how unusual a value is relative to the rest of the data. A z-score of 0 represents the mean, while positive and negative values indicate deviations above and below the mean, respectively. The larger the absolute value of the z-score, the more extreme the deviation is.

Moreover, if the data can be assumed to follow a normal distribution, the z-score can be used to calculate the probability of observing a specific value or a value more extreme. This is done with a standard normal distribution table or a statistical software package. The resulting probability (p-value) represents the likelihood of obtaining the observed z-score or a more extreme one, assuming the null hypothesis is true (i.e., the value comes from the assumed distribution).
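In R, the table lookup is replaced by pnorm(). The following is a minimal sketch for a single z-score, assuming the normal model is appropriate; the value 1.96 is used only because it is a familiar reference point.

```r
z <- 1.96   # example z-score chosen for illustration

# Probability of a value at least this far above the mean (one-sided)
p_upper <- pnorm(z, lower.tail = FALSE)

# Probability of a value at least this far from the mean in either direction
p_two_sided <- 2 * pnorm(-abs(z))

print(round(c(one_sided = p_upper, two_sided = p_two_sided), 4))
```

The two-sided probability is close to 0.05, which is why 1.96 is the usual cutoff at the 5% significance level.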

Understanding the statistical significance and probability associated with a z-score is crucial for drawing inferences from data. It helps researchers and practitioners evaluate the likelihood of observing extreme values, make informed decisions, and draw meaningful conclusions from their analyses.

Standardization Using Z-Score: Transforming Data for Statistical Analysis

In the realm of statistics, z-scores are powerful tools that allow us to standardize and transform data. This process enables us to compare data points across different datasets and distributions, opening up a world of possibilities for statistical analysis.

Imagine you have two datasets, one containing test scores from a class of students and the other representing sales figures from different regions. Comparing these datasets would be challenging due to their vastly different scales and distributions.

Enter the z-score. By calculating the z-score of each data point, we can transform the data into a standardized form, giving us a common ground for comparison. The z-score represents the number of standard deviations a data point is away from the mean of the distribution.

This standardization process allows us to draw meaningful comparisons between data points, regardless of their original units or distributions. For instance, a z-score of 1 indicates that a data point is one standard deviation above the mean, while a z-score of -2 signifies that it is two standard deviations below the mean.

By standardizing data using z-scores, we can:

- Compare data from different groups or distributions: This enables us to identify outliers, draw inferences, and make meaningful comparisons between seemingly disparate datasets.

- Pool data from different sources: By standardizing data to a common scale, we can combine datasets for more robust statistical analyses.

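- Simplify statistical calculations: Methods that are sensitive to the scale of the variables, such as principal component analysis, k-means clustering, and regularized regression, generally work better when the inputs are standardized first.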

While z-scores are a valuable tool, it’s important to note that they are sensitive to outliers. Extreme values can significantly distort the mean and standard deviation, which in turn affects the z-scores. Therefore, it’s always advisable to inspect the data for outliers before calculating z-scores to ensure the validity of the results.
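The effect is easy to reproduce. In the sketch below, a single extreme value is appended to a small made-up sample, and the z-scores of the original points are compared before and after.

```r
clean        <- c(48, 50, 51, 49, 52, 50)   # made-up, tightly clustered values
with_outlier <- c(clean, 120)               # same data plus one extreme value

z_clean <- as.numeric(scale(clean))
z_out   <- as.numeric(scale(with_outlier))

# The outlier pulls the mean upward and inflates the standard deviation
print(c(mean = mean(clean), sd = sd(clean)))
print(c(mean = mean(with_outlier), sd = sd(with_outlier)))

# z-scores of the same six original values, before and after
print(round(rbind(before = z_clean, after = z_out[1:6]), 2))

# z-score of the outlier itself
print(round(z_out[7], 2))
```

Note that the outlier inflates the standard deviation so much that its own z-score stays below 3, so a simple "greater than 3" rule would fail to flag it in a sample this small.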

Applications of Z-Scores

In the realm of data analysis and statistical inference, Z-scores shine as a versatile tool with a multitude of applications. They serve as a powerful aid in hypothesis testing, statistical inference, and data analysis.

Hypothesis Testing:

Z-scores are integral in hypothesis testing, a process of evaluating the plausibility of a claim based on observed data. By comparing the Z-score of a sample to a threshold determined by the level of significance, researchers can determine if the observed difference is statistically significant or merely due to chance. This enables them to make informed decisions about the validity of their hypotheses.
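A one-sample z-test is the simplest version of this comparison. The sketch below uses hypothetical numbers and assumes the population standard deviation is known, which is the situation in which a z-test (rather than a t-test) applies.

```r
# Hypothetical setup: is the population mean different from 100?
mu0   <- 100   # mean under the null hypothesis
sigma <- 15    # population standard deviation, assumed known
n     <- 36    # sample size
xbar  <- 106   # observed sample mean

se     <- sigma / sqrt(n)     # standard error of the mean: 15 / 6 = 2.5
z_stat <- (xbar - mu0) / se   # test statistic: 6 / 2.5 = 2.4

alpha  <- 0.05
z_crit <- qnorm(1 - alpha / 2)   # two-sided threshold, about 1.96

reject  <- abs(z_stat) > z_crit
p_value <- 2 * pnorm(-abs(z_stat))

print(c(z = z_stat, critical = z_crit, p_value = p_value))
print(reject)
```

Here the test statistic exceeds the threshold, so the null hypothesis would be rejected at the 5% level.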

Statistical Inference:

Z-scores provide a means to draw inferences about a population based on a sample. By combining the sample mean, its standard error (the standard deviation divided by the square root of the sample size), and a critical z-value for the chosen confidence level, researchers can construct a confidence interval for the population mean. This interval gives a range within which the true population mean is likely to fall. It aids in making accurate generalizations about the population beyond the sample.
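A z-based 95% confidence interval can be sketched in a few lines. The summary figures are hypothetical, and the normal approximation used here is reasonable for large samples; for small samples, qt() would normally replace qnorm().

```r
# Hypothetical sample summary (made-up numbers)
xbar <- 50    # sample mean
s    <- 10    # sample standard deviation
n    <- 100   # sample size

se     <- s / sqrt(n)    # standard error of the mean: 10 / 10 = 1
z_crit <- qnorm(0.975)   # critical value for a 95% interval, about 1.96

ci <- c(lower = xbar - z_crit * se, upper = xbar + z_crit * se)
print(round(ci, 2))
```

With these numbers the interval runs from about 48.04 to 51.96.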

Data Analysis:

Z-scores are indispensable in data exploration and analysis. By standardizing data sets with different scales, Z-scores allow for meaningful comparisons between variables. This facilitates the identification of outliers, patterns, and relationships within the data, enabling researchers to gain deeper insights and make more informed decisions.

By leveraging the power of Z-scores, researchers can delve into the intricacies of their data, uncover hidden insights, and draw statistically sound conclusions. They serve as a cornerstone of statistical analysis, providing a solid foundation for evidence-based decision-making.

Limitations of Z-Score: Exploring the Boundaries of Normalcy

When delving into the world of statistical analysis, the z-score is an indispensable tool for understanding data distribution and identifying outliers. However, like any statistical measure, it has certain limitations that must be acknowledged to ensure accurate interpretations.

Assumptions of Normality: A Delicate Balance

One crucial limitation concerns the assumption of normality. A z-score can be computed for any dataset, but translating it into a probability or percentile with the standard normal distribution is only valid when the data follow a roughly bell-shaped distribution. If the data deviate significantly from this pattern, for example because they are strongly skewed, those probability statements can be misleading even though the z-scores themselves are calculated correctly.

Outliers: The Unruly Guests at the Data Party

Outliers, those extreme values that stand out like sore thumbs, can also wreak havoc on z-score calculations. When outliers are present, they can skew the mean and standard deviation, which in turn affects the z-score values. Consequently, the z-scores may not accurately reflect the distribution of the majority of the data.

Alternatives to the Z-Score: Exploring Other Measures

In situations where non-normality or outliers pose challenges, alternative statistical measures come into play. Measures such as the median absolute deviation (MAD) or the interquartile range (IQR) are much less affected by extreme values and can be used to handle non-normal or outlier-laden datasets. The t-score addresses a different problem: it replaces the z-score when the population standard deviation is unknown and has to be estimated from a small sample.
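One common way to use the MAD is a "robust z-score" that swaps the mean for the median and the standard deviation for the MAD. The sketch below is an illustration on made-up data, not a prescribed method; it relies on R's mad(), which by default multiplies by 1.4826 so that the result is comparable to a standard deviation for normal data.

```r
x <- c(48, 50, 51, 49, 52, 50, 120)   # made-up data with one extreme value

# Classic z-score: mean and standard deviation
z_classic <- (x - mean(x)) / sd(x)

# Robust variant: median and scaled median absolute deviation
z_robust <- (x - median(x)) / mad(x)

print(round(rbind(classic = z_classic, robust = z_robust), 2))
```

The extreme value barely registers on the classic scale because it inflates the standard deviation, while on the robust scale it stands out clearly and the other points keep small scores.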

While the z-score is a powerful analytical tool, it is essential to be mindful of its limitations. Assumptions of normality and the presence of outliers can impact the accuracy of z-score calculations. By understanding these limitations and considering alternative measures, statisticians can ensure that their data analyses are robust and insightful, regardless of the challenges their data may present.

Alternatives to Z-Score: Unveiling Other Statistical Measures

In the realm of statistics, the z-score reigns supreme as a benchmark for data standardization. However, when certain assumptions are not met or the data distribution deviates from the bell curve, statisticians seek alternatives to the z-score to ensure accurate and meaningful analysis.

T-Score: An Alternative for Small Samples

One such alternative is the t-score, which is closely related to the z-score. Both express how far a value lies from a mean in units of standard deviation (or standard error). However, the t-score is referred to Student’s t-distribution, which has heavier tails than the normal distribution and approaches it as the sample size grows.

The key distinction is that the t-distribution accounts for the extra uncertainty that comes from estimating the standard deviation from a small sample. Thus, the t-score is the appropriate choice when the population standard deviation is unknown and the sample size is relatively small. It is not, however, a remedy for outliers or strong non-normality; robust measures are better suited to those cases.
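One way to see how the t-distribution differs from the normal distribution for small samples is to compare their critical values. The sketch below uses qt() and qnorm() for a two-sided 95% level; the degrees of freedom shown are arbitrary examples.

```r
deg_free <- c(4, 9, 29, 99)   # example degrees of freedom (sample size minus 1)

t_crit <- qt(0.975, df = deg_free)   # t critical values
z_crit <- qnorm(0.975)               # normal critical value, about 1.96

print(round(data.frame(df = deg_free, t_crit = t_crit, z_crit = z_crit), 3))
```

The t critical values are always larger than the normal one and shrink toward it as the degrees of freedom increase, which reflects the added uncertainty in small samples.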

Other Statistical Measures for Different Needs

Beyond the t-score, statisticians have developed a plethora of other statistical measures tailored to specific scenarios and data types. Here’s a brief overview:

- Percentile Rank: Expresses the percentage of observations in a dataset below a particular data point.

- Rank Transform: Converts raw data into a sequence of ranks, with each rank indicating the data point’s relative position within the dataset.

- Rescaled Standard Scores: Linear transformations of the z-score that move it to a more convenient scale, such as one with a mean of 50 and a standard deviation of 10.

- Median Absolute Deviation (MAD): A robust measure of variability that is less sensitive to outliers compared to standard deviation.
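Two of these measures, the rank transform and the percentile rank, take only a line or two in base R. The sketch below uses made-up values and defines percentile rank as the percentage of observations strictly below each value, which is one of several conventions in use.

```r
x <- c(12, 7, 7, 20, 15)   # made-up values, including a tie

# Rank transform: tied values receive the average of their ranks by default
ranks <- rank(x)
print(ranks)

# Percentile rank: percentage of observations strictly below each value
pct_below <- sapply(x, function(v) 100 * sum(x < v) / length(x))
print(pct_below)
```

Neither measure depends on the mean or standard deviation, so a single extreme value changes the results far less than it would change a z-score.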

Choosing the Right Measure

Deciding which statistical measure to use depends on the nature of the data, the assumptions being made, and the specific research question at hand. For normally distributed data with large sample sizes, the z-score is a reliable choice. When the sample is small and the population standard deviation must be estimated, the t-score is the usual choice; when the data are skewed or contain outliers, rank-based or MAD-based measures should be considered.

Statisticians rely on these alternative measures to ensure the accuracy and interpretability of their analyses, even when the assumptions underlying the z-score are not fully met. By carefully selecting the appropriate statistical measure, researchers can gain valuable insights and make informed decisions based on their data.