Understanding Z-Scores: Quantifying Data Deviations And Statistical Applications

A standardized test statistic, such as the z-score, quantifies how far a data point deviates from the mean in standard deviation units. To calculate it, find the mean and standard deviation of the data, subtract the mean from the data point, and divide the result by the standard deviation. Positive z-scores indicate the data point is above the mean, while negative scores indicate it’s below. Z-scores are used to compare data points, determine outliers, and conduct statistical tests and calculations, such as hypothesis testing and finding confidence intervals.

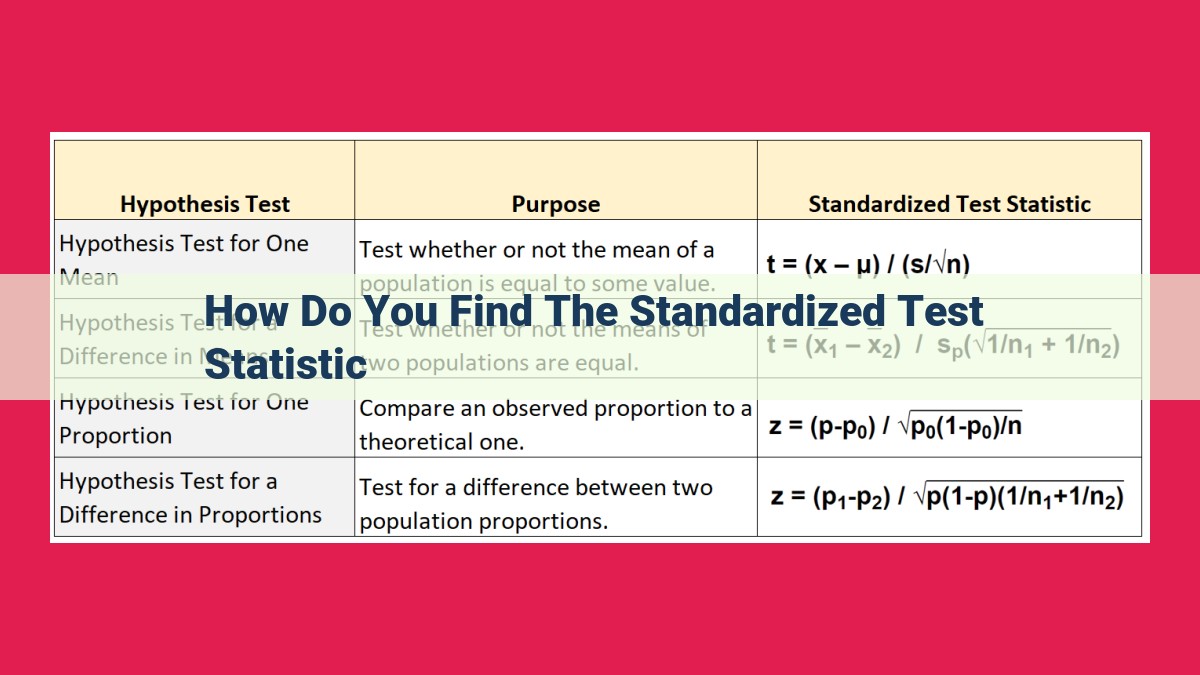

- Define the concept of standardized test statistic and its significance.

Standardized Test Statistics: The Key to Unlocking Meaning from Data

In the realm of statistics, where numbers dance and patterns unfold, the concept of a standardized test statistic emerges as a crucial tool for unlocking the significance of our data. It’s like a compass, guiding us through the labyrinth of numbers, revealing their hidden truths.

A standardized test statistic transforms a raw data point into a standardized value, allowing us to compare data from different sets or distributions, regardless of their units of measurement or scales. It’s like converting all our data points into the same currency, enabling us to judge their relative worth objectively. This standardization allows us to make informed decisions and draw meaningful conclusions from our data.

Standardized test statistics find wide application in various statistical techniques, such as:

- Hypothesis testing: Assessing the validity of a hypothesis by comparing the observed data to expected data under the assumption of the hypothesis being true.

- Confidence intervals: Estimating the range of values within which a population parameter (such as the mean) is likely to fall.

- Probability calculations: Determining the likelihood of an event occurring based on the distribution of the data.

Understanding Measures of Dispersion: Untangling the Spread of Data

Just like the diversity of life on Earth, data also comes in all shapes and sizes. Sometimes, it’s helpful to summarize this variety using measures of dispersion. These measures tell us how spread out the data is, allowing us to better understand the underlying patterns. The three most common measures of dispersion are:

Standard Deviation

Imagine a group of students taking a test. The standard deviation measures how much their scores deviate from the mean (average) score. A high standard deviation indicates that the scores are spread out widely, while a low standard deviation suggests that the scores are clustered closely around the mean.

Variance

The variance is simply the square of the standard deviation. It represents the average squared deviation from the mean. Like the standard deviation, a high variance indicates a wide spread of data, while a low variance indicates a narrow spread.

Mean

The mean is the most basic measure of central tendency. It’s simply the sum of all data points divided by the number of points. The mean provides a general idea of where the data is centered, but it doesn’t tell us how spread out it is. To determine the spread, we need to use standard deviation or variance.

Interplay of Mean and Dispersion

These three measures work together to paint a complete picture of the data. The mean gives us the central location, while the standard deviation (or variance) gives us an idea of how the data is distributed around the mean.

For example, if we have two datasets with the same mean, but one has a higher standard deviation, we know that the data in the second dataset is more spread out. Conversely, if the mean and standard deviation are both low, the data is tightly clustered around the central value.

Measures of dispersion are essential for understanding the spread of data. By combining the mean with the standard deviation or variance, we can gain a deeper insight into the characteristics of our data. This helps us make more informed decisions and draw meaningful conclusions from our analyses.

Unveiling the Secrets of Standardized Test Statistics: A Guide to Statistical Analysis

In the realm of statistics, understanding standardized test statistics is crucial. They serve as a powerful tool for interpreting data, making inferences, and drawing meaningful conclusions from vast amounts of information. This comprehensive guide will delve into the concept of standardized test statistics, exploring their significance and applications.

Understanding Measures of Dispersion

Data often exhibits variability, and measures of dispersion help us quantify this spread. Standard deviation and variance provide a numerical representation of how much data values deviate from their mean. A smaller standard deviation indicates a narrower spread of data, while a larger standard deviation suggests a wider distribution.

Sampling and Estimation: Unveiling Population Characteristics

To study a large population, researchers often rely on smaller samples that accurately represent the population. Sample mean offers a valuable estimate of the population mean. This concept is fundamental to statistical inference, allowing us to make generalizations about populations based on sample observations.

Delving into Statistical Distributions

Sampling distributions, such as the Student’s t-distribution and the z-distribution, play a critical role in statistical inference. The z-distribution is useful when the population standard deviation is known. Its formula, z = (x – μ) / σ, calculates a z-score that measures how many standard deviations a data point x lies from the mean μ.

Calculating Standardized Test Statistics: A Three-Step Process

Determining z-scores involves three simple steps:

- Determine mean and standard deviation: Calculate the mean and standard deviation of the data.

- Calculate difference from the mean: Subtract the mean from the data value (x – μ).

- Divide by the standard deviation: Divide the result by the standard deviation ((x – μ) / σ).

Interpreting Z-Scores: Uncovering Deviations from the Mean

Positive z-scores indicate that a data point lies above the mean, while negative z-scores show that it falls below the mean. The magnitude of the z-score represents how far the data point is from the mean in terms of standard deviation units. For instance, a z-score of 2 suggests that the data point is two standard deviations above the mean.

Applications of Z-Scores: Empowering Statistical Analysis

Z-scores find wide application in statistics:

- Hypothesis testing: Z-scores are used to determine whether observed data supports a hypothesized claim.

- Confidence intervals: Z-scores help construct confidence intervals that provide a range within which population parameters are likely to fall.

- Probability calculations: Z-scores enable the calculation of probabilities associated with specific data values in a distribution.

Standardized test statistics provide a powerful tool for analyzing data, making inferences, and drawing conclusions. Understanding their calculation and interpretation empowers researchers to unravel the mysteries of large datasets and gain valuable insights from seemingly complex information. By following the guidelines outlined in this comprehensive guide, you can effectively utilize standardized test statistics to enhance your statistical analysis and make informed decisions.

Understanding Standardized Test Statistics and Z-Scores

In statistics, standardized test statistics are crucial tools used to measure the significance of observed data. They help us compare different datasets, estimate population parameters, and make inferences about statistical hypotheses. Let’s delve into the fascinating world of standardized test statistics, with a particular focus on z-scores.

Measures of Dispersion:

To understand the concept of z-scores, we first need to understand measures of dispersion. These measures quantify how spread out or dispersed a dataset is. Commonly used measures of dispersion include:

- Standard deviation (σ): This measures how much data points deviate from the mean. A larger standard deviation indicates more dispersion.

- Variance (σ²): The square of the standard deviation, which represents the average of the squared differences from the mean.

- Mean (μ): The average of a dataset, which represents the central tendency.

Statistical Distributions:

Data can be distributed in various ways, and the Student’s t-distribution and z-distribution (or standard normal distribution) are two important probability distributions. The z-distribution is used when the population parameters (mean and standard deviation) are known.

Calculating the Z-Score:

A z-score, denoted by z, is a standardized test statistic that measures how many standard deviations a data point is away from the mean. The formula for calculating a z-score is:

z = (x - μ) / σ

where:

- x is the data point

- μ is the population mean

- σ is the population standard deviation

Interpretation of Z-Scores:

Positive z-scores indicate that a data point is above the mean, while negative z-scores indicate that it is below the mean. The absolute value of a z-score represents how far a data point is from the mean in terms of standard deviation units.

Applications of Z-Scores:

Z-scores have numerous applications in statistical analysis, including:

- Hypothesis testing

- Confidence intervals

- Probability calculations

For instance, in hypothesis testing, z-scores are used to determine the significance of a difference between a sample mean and a hypothesized population mean.

Standardized test statistics, particularly z-scores, are essential tools for making sense of data. By using these statistics, we can compare datasets, estimate population parameters, and draw informed conclusions. So, next time you encounter a z-score, remember its significance and the role it plays in unlocking the mysteries of statistical data.

Calculating the Standardized Test Statistic:

- Outline the three steps involved in calculating z-score:

- Determine mean and standard deviation

- Calculate difference from the mean

- Divide by the standard deviation

Calculating the Standardized Test Statistic: A Step-by-Step Guide

Understanding the concept of standardized test statistics is essential for statistical analysis. They allow us to compare data from different groups or populations using a common metric. Of these, the z-score, calculated using the Student’s t-distribution, is widely used. Here’s a simple three-step guide to help you calculate z-scores:

1. Determine Mean and Standard Deviation

The first step involves finding the mean (average) and standard deviation (a measure of data spread) of the dataset. The mean represents the central tendency of the data, while the standard deviation reflects its dispersion.

2. Calculate Difference from the Mean

Once you have the mean, calculate the difference between each data point (x) and the mean (μ). This difference, also known as the deviation score, indicates how far a particular data point is from the average.

3. Divide by the Standard Deviation

The final step is to divide the deviation score by the standard deviation (σ). This standardizes the data and allows for comparison across different datasets. The resulting value is the z-score, which represents the number of standard deviation units that a data point is away from the mean.

Example:

Suppose we have a dataset of test scores with a mean of 75 and a standard deviation of 10. To calculate the z-score for a score of 85, we follow the steps:

- Mean: μ = 75

- Deviation Score: x – μ = 85 – 75 = 10

- Z-Score: z = (x – μ) / σ = 10 / 10 = 1

This z-score of 1 indicates that the score of 85 is one standard deviation unit above the mean. By understanding and applying these steps, you can effectively calculate z-scores and use them to analyze your data.

Understanding Standardized Test Statistics and the Meaning of Z-Scores

In the realm of statistics, understanding concepts like standardized test statistics is crucial for analyzing data effectively. One such statistic is the z-score, which plays a vital role in quantifying how far a data point deviates from the mean of a distribution.

Interpreting Z-Scores:

Z-scores are calculated using the formula z = (x - μ) / σ, where:

xis the data pointμis the population meanσis the population standard deviation

Positive Z-Scores:

A positive z-score indicates that the data point is located above the mean. The magnitude of the z-score represents the number of standard deviations the data point is away from the mean. For instance, a z-score of 2 means that the data point is 2 standard deviations above the mean.

Negative Z-Scores:

On the other hand, a negative z-score signifies that the data point lies below the mean. Its magnitude denotes the number of standard deviations below the mean. A z-score of -1.5 implies that the data point is 1.5 standard deviations below the mean.

Distance from the Mean:

By providing this numerical value, z-scores allow us to compare data points across different distributions with varying means and standard deviations. They offer a standardized metric for measuring how far a particular data point is from the center of a distribution.

Applications in Statistics:

Z-scores find widespread application in statistical analysis, including:

- Hypothesis testing: Determining whether a sample mean differs significantly from the population mean

- Confidence intervals: Estimating the range within which the true population mean is likely to fall

- Probability calculations: Assessing the likelihood of observing a particular data point or a range of data points

By comprehending the interpretation of z-scores, we gain a deeper understanding of statistical distributions and the variation within data sets. This knowledge empowers us to make informed decisions based on statistical evidence.

Applications of Z-Scores: Exploring Their Role in Statistical Analysis

In the realm of statistics, z-scores emerge as indispensable tools for understanding data and drawing meaningful conclusions. They serve as standardized measurements that allow us to compare data points from different distributions and gauge their relative distance from the mean.

One pivotal application of z-scores lies in hypothesis testing. Here, we compare the observed z-score to a critical value derived from the expected distribution. If the z-score falls outside the critical region, it suggests that the null hypothesis is unlikely to be true. This process empowers researchers to make informed decisions about the validity of their claims.

Z-scores also play a crucial role in constructing confidence intervals. These intervals provide a range of plausible values for a population parameter, such as the mean. By calculating the z-score associated with a given confidence level, we can determine the boundaries of the interval and estimate the true parameter with a defined level of certainty.

Moreover, z-scores are employed in probability calculations. By standardizing data, we can utilize the standard normal distribution, which provides a known probability distribution for z-scores. This enables us to determine the likelihood of observing a particular data point or event under specific conditions.

In essence, z-scores serve as a versatile tool for analyzing data, testing hypotheses, constructing confidence intervals, and calculating probabilities. They allow us to delve deeper into the intricacies of our data and draw informed conclusions from statistical observations.