Quantifying Similarity: Mathematical Functions For Data Analysis And Information Retrieval

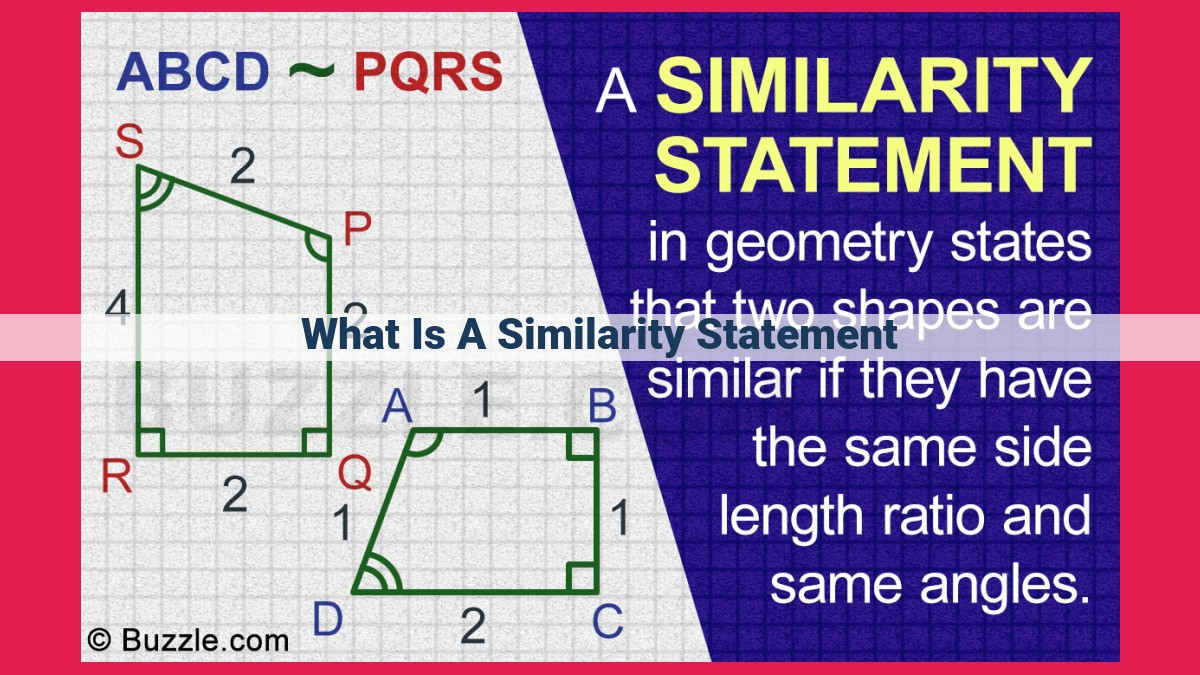

A similarity measure is a mathematical function that quantifies how alike two objects are. Similarity measures play a central role in data mining, machine learning, and information retrieval, where they underpin classification, clustering, and recommendation systems. Common examples include cosine similarity for vectors, Jaccard similarity for sets, Levenshtein distance for strings, and Hamming distance for equal-length binary strings. The last two are distance metrics, which measure dissimilarity rather than similarity. The right measure depends on the type of data and the task, and a poor choice can make otherwise sound comparisons misleading.

Understanding Similarity Measures: The Foundation of Intelligent Systems

In the realm of computers and artificial intelligence, systems increasingly rely on similarity measures to make sense of the world. These mathematical tools quantify the resemblance between objects, enabling computers to perform tasks like object recognition, language processing, and recommendation.

Similarity is an essential concept in countless fields, from biology (comparing DNA sequences) to social sciences (studying similarities in behavior). It plays a pivotal role in helping machines understand, categorize, and interact with their surroundings.

Measuring similarity is crucial for many applications, such as:

- Classification: Assigning objects to predefined categories based on their characteristics. For example, recognizing an image as a cat or dog.

- Recommendation Systems: Personalizing experiences by suggesting items (e.g., movies, products) similar to ones a user has enjoyed.

- Natural Language Processing: Understanding the meaning of text, extracting information, and translating languages.

- Fraud Detection: Identifying suspicious transactions based on similarity to known fraudulent patterns.

- Image Processing: Matching and comparing images for object detection and recognition.

Similarity Metrics: The Cornerstone of Measuring Similarities

In the vast world of data, we often encounter the need to determine how similar two or more objects are. This is where similarity metrics come into play, acting as indispensable tools for quantifying the degree of likeness between objects.

Defining Similarity Metrics

Similarity metrics are mathematical functions that measure the level of resemblance between objects. These objects can be anything from documents and images to sets and strings. The output of a similarity metric is a numerical value, typically ranging from 0 (no similarity) to 1 (perfect similarity).

Types of Similarity Metrics

Various similarity metrics have been developed, each suited to different types of data and applications. Here are some of the most common (the last two are distances, so smaller values mean more similar objects):

- Cosine Similarity: Measures the cosine of the angle between two vectors, commonly used to compare documents or images represented as vectors.

- Jaccard Similarity: Calculates the overlap between two sets, often employed in text analysis and bioinformatics.

- Levenshtein Distance: Quantifies the number of edits (insertions, deletions, substitutions) required to transform one string into another, widely used for error detection and spell checking.

- Hamming Distance: Counts the positions at which two equal-length binary strings differ, with applications in digital communications and error detection.

Choosing the Right Metric

The choice of similarity metric depends on the specific application and the nature of the data being compared. Consider the following factors:

- The type of data (e.g., vectors, sets, strings)

- The purpose of the comparison (e.g., ranking, clustering, anomaly detection)

- The desired interpretation of the similarity score

By carefully selecting the appropriate similarity metric, you can ensure accurate and meaningful comparisons between objects, unlocking valuable insights from your data.

Cosine Similarity: Measuring Similarity in Vectors

Imagine you’re comparing two documents to determine how similar their content is. How do you quantify that similarity? This is where cosine similarity comes into play.

Defining Cosine Similarity

Cosine similarity is a mathematical measure that quantifies the likeness between two vectors. It is calculated as the cosine of the angle between the two vectors. A vector here is simply an ordered list of numbers, one per dimension, that represents the characteristics of an object. For example, a document can be represented as a vector where each element is the number of times a particular word occurs in the document.

How Cosine Similarity Works

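For arbitrary real-valued vectors, cosine similarity ranges from -1 to +1. A value of 1 means the vectors point in the same direction, 0 means they are orthogonal (for word-count vectors, the documents share no words), and -1 means they point in opposite directions. Because word-frequency vectors have no negative entries, their cosine similarity always lies between 0 and 1.

To calculate cosine similarity, compute the dot product of the two vectors and divide it by the product of their magnitudes (lengths): cos(θ) = (A · B) / (‖A‖ × ‖B‖). The dot product measures how closely the vectors align, and dividing by the magnitudes removes the effect of vector length, so a long document and a short one with the same word proportions still score 1. Equivalently, you can first normalize each vector to unit length by dividing every element by the vector's magnitude and then take the dot product of the normalized vectors; in that case no further division is needed.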
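As a minimal sketch, assuming naive whitespace tokenization and a small hypothetical vocabulary, the calculation can be written in plain Python. The helper names `term_vector` and `cosine_similarity` are illustrative and not taken from any particular library.

```python
import math
from collections import Counter


def term_vector(text: str, vocabulary: list[str]) -> list[int]:
    """Count how often each vocabulary word occurs in text (naive whitespace tokenization)."""
    counts = Counter(text.lower().split())
    return [counts[word] for word in vocabulary]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """cos(theta) = (a . b) / (|a| * |b|)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


vocab = ["cat", "dog", "fish"]            # hypothetical vocabulary
doc1 = term_vector("cat cat dog", vocab)  # [2, 1, 0]
doc2 = term_vector("cat dog", vocab)      # [1, 1, 0]
print(round(cosine_similarity(doc1, doc2), 4))  # 0.9487
```

Real systems usually tokenize more carefully and often weight terms (for example with TF-IDF) before comparing vectors; the similarity formula itself stays the same.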

Applications

Cosine similarity has wide applications in various domains, including:

- Document Clustering: Cosine similarity is used to group similar documents together. This is crucial for search engines and information retrieval systems.

- Image Comparison: By representing images as vectors, cosine similarity can be used to compare their visual content. This is useful for image search and object recognition.

- Vector Analysis: Cosine similarity can be applied to any type of vector data. It is used in machine learning, natural language processing, and other fields to measure the similarity between different representations.

Cosine similarity is a fundamental concept in the field of similarity measurement. Its ability to quantify the likeness between vectors has made it an invaluable tool in various applications. By understanding how cosine similarity works, you can leverage it effectively to analyze data and solve problems that require the measurement of similarity.

Unveiling Jaccard Similarity: The Powerhouse for Set and Text Overlap

In the realm of data analysis, where understanding similarities and differences is crucial, the Jaccard similarity stands as a beacon of clarity. This ingenious metric, named after the Swiss botanist Paul Jaccard, goes beyond mere comparison; it quantifies the overlap between two sets, providing valuable insights into data relationships.

At its core, the Jaccard similarity is the proportion of shared elements among all distinct elements of two sets. The calculation is straightforward: divide the size of the intersection of the sets by the size of their union. Let’s imagine two sets, A and B:

Set A: {apple, banana, cherry}

Set B: {banana, kiwi, pineapple}

The intersection of A and B is the set of elements they have in common: {banana}. The union of A and B is the set of all elements that appear in either set: {apple, banana, cherry, kiwi, pineapple}. Plugging the sizes of these sets into the formula, we get:

Jaccard Similarity = |A ∩ B| / |A ∪ B|

= |{banana}| / |{apple, banana, cherry, kiwi, pineapple}|

= 1 / 5

= 0.2

This result indicates that 20% of the distinct elements appearing in either set are shared by both.
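The formula maps directly onto Python's built-in set operators. This is a minimal sketch; the function name `jaccard_similarity` is illustrative, and treating two empty sets as identical is a convention assumed here, since the ratio is 0/0 in that case.

```python
def jaccard_similarity(a: set, b: set) -> float:
    """|A ∩ B| / |A ∪ B|.

    Two empty sets are treated as identical (similarity 1.0); this is an
    assumed convention because the ratio is 0/0 in that case.
    """
    union = a | b
    if not union:
        return 1.0
    return len(a & b) / len(union)


set_a = {"apple", "banana", "cherry"}
set_b = {"banana", "kiwi", "pineapple"}
print(jaccard_similarity(set_a, set_b))  # 0.2
```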

The beauty of the Jaccard similarity lies in its versatility. It can measure set similarity in various contexts, such as comparing sets of genes, documents, or even images. But it doesn’t stop there. Jaccard similarity is also widely used in natural language processing (NLP) to measure text overlap.

In the realm of NLP, the Jaccard similarity is employed to quantify the similarity of word sets extracted from text documents. This information is invaluable for tasks such as:

- Document clustering: Grouping similar documents together for efficient information retrieval.

- Text classification: Identifying the category or topic to which a document belongs.

- Duplicate content detection: Flagging documents that contain a high degree of overlap.
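For text, the same ratio is applied to the sets of words in each document. The sketch below assumes a deliberately simple tokenizer (lowercase, split on non-word characters) and a hypothetical near-duplicate threshold of 0.8; both are illustrative choices, not recommended settings.

```python
import re


def word_set(text: str) -> set[str]:
    """Lowercase the text and collect its word tokens into a set.

    This tokenizer is a simplifying assumption; real pipelines may also
    stem words or drop stop words.
    """
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def text_jaccard(doc_a: str, doc_b: str) -> float:
    """Jaccard similarity of the word sets of two texts."""
    a, b = word_set(doc_a), word_set(doc_b)
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def is_near_duplicate(doc_a: str, doc_b: str, threshold: float = 0.8) -> bool:
    """Flag two texts as near-duplicates; the 0.8 threshold is hypothetical."""
    return text_jaccard(doc_a, doc_b) >= threshold


print(text_jaccard("The quick brown fox", "the quick brown dog"))       # 0.6
print(is_near_duplicate("The quick brown fox", "the quick brown dog"))  # False
```

Because word sets ignore order and repetition, "dog bites man" and "man bites dog" score 1.0; comparing sets of consecutive word pairs or character sequences instead is one way to make the measure order-sensitive.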

The Jaccard similarity is a powerful tool that empowers data analysts with the ability to discern similarities between sets and text. Its versatility and simplicity make it an indispensable weapon in the arsenal of data exploration.

Levenshtein Distance: Identifying String Similarity with Precision

In the realm of data, accuracy is paramount. Whether it’s processing text or analyzing genetic sequences, detecting errors and ensuring precision is crucial. This is where Levenshtein distance, a powerful string distance metric, steps in.

Understanding Levenshtein Distance

Imagine you’re writing an email and accidentally type “teh” instead of “the.” Levenshtein distance measures how far apart these two strings are by calculating the minimum number of single-character edits (insertions, deletions, or substitutions) required to transform one into the other. In this case, it is 2: substitute “e” with “h” and “h” with “e.” (The related Damerau–Levenshtein distance also counts swapping two adjacent characters as a single edit, so it gives 1 for this pair.)

Applications in Error Detection and Spell Checking

Levenshtein distance finds widespread use in:

- Spell checking: Identifying misspelled words by comparing them to a dictionary.

- Error detection: Detecting errors in data transmission or storage systems.

- Bioinformatics: Comparing DNA and protein sequences for mutations or evolutionary relationships.

Example

Let’s consider the strings “distance” and “distances.” The Levenshtein distance between them is 1, as only one character (the “s”) needs to be added to transform “distance” into “distances.”
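The minimum edit count is usually computed with dynamic programming (the Wagner–Fischer algorithm). Below is a minimal sketch that keeps only two rows of the table, plus an illustrative spell-check helper; the names `levenshtein` and `closest_word` and the tiny dictionary are hypothetical.

```python
def levenshtein(s: str, t: str) -> int:
    """Minimum number of single-character insertions, deletions, and
    substitutions needed to turn s into t (Wagner-Fischer, two rows)."""
    prev = list(range(len(t) + 1))  # distance from "" to each prefix of t
    for i, cs in enumerate(s, start=1):
        curr = [i]  # distance from s[:i] to ""
        for j, ct in enumerate(t, start=1):
            cost = 0 if cs == ct else 1
            curr.append(min(prev[j] + 1,         # delete cs
                            curr[j - 1] + 1,     # insert ct
                            prev[j - 1] + cost))  # substitute, or keep a match
        prev = curr
    return prev[-1]


def closest_word(word: str, dictionary: list[str]) -> str:
    """Return the dictionary entry nearest to word (ties: first one listed)."""
    return min(dictionary, key=lambda entry: levenshtein(word, entry))


print(levenshtein("distance", "distances"))                        # 1
print(levenshtein("teh", "the"))                                   # 2
print(closest_word("distnce", ["instance", "distance", "dance"]))  # distance
```

Plain Levenshtein distance can mislead a spell checker on transpositions: with the dictionary ["the", "ten"], "teh" is closer to "ten" (1 substitution) than to "the" (2 edits), which is one reason transposition-aware variants such as Damerau–Levenshtein distance are often preferred for spelling correction.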

Levenshtein distance is an invaluable tool for detecting errors and measuring similarity in strings. Its versatility makes it applicable in diverse fields, ensuring accuracy and precision in data processing. By understanding the Levenshtein distance, we can unlock a powerful technique for ensuring the integrity of our data.

Hamming Distance: Unveiling the Closeness of Binary Strings

In the realm of digital communications, transmitting information accurately is paramount. Every bit of data, represented as a 0 or 1 in binary strings, carries crucial information. To ensure its integrity, we must find ways to detect and correct transmission errors. This is where Hamming distance comes into play.

Defining the Hamming Distance

Imagine we have two binary strings: “101010” and “101110.” Hamming distance measures the disparity between these strings by counting the number of positions where the bits differ. In our example, the Hamming distance is 1 because only one bit (the fourth from the left) differs.

Calculating Hamming Distance

Calculating the Hamming distance is straightforward: align the two strings, which must have the same length, and count the positions where the bits differ. For instance, the Hamming distance between “1001” and “1011” is 1, as only the third bit differs.

Applications in Error Detection

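The Hamming distance finds its primary application in error detection. In digital communications, errors can arise due to noise or interference. When the original string is known, for example when testing a channel with a known bit pattern, the Hamming distance between the sent and received strings counts the bit errors directly. In normal operation the receiver does not know the original, so error-detecting codes permit only valid codewords that differ from one another in at least d positions; a received string that is not a valid codeword reveals an error, and any pattern of up to d − 1 flipped bits is always detected.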

Example: Let’s say we send the string “101111” but receive “101011.” The Hamming distance is 1, indicating that one bit (the fourth from the left) flipped during transmission.
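A minimal Python sketch of the calculation, checked against the examples in this section. The function names are illustrative; the integer variant assumes non-negative integers and counts the 1 bits of their XOR, which marks exactly the positions where they differ.

```python
def hamming_distance(a: str, b: str) -> int:
    """Number of positions at which two equal-length strings differ."""
    if len(a) != len(b):
        raise ValueError("Hamming distance is only defined for strings of equal length")
    return sum(ca != cb for ca, cb in zip(a, b))


def hamming_distance_int(x: int, y: int) -> int:
    """Same idea for non-negative integers: count the 1 bits in x XOR y."""
    return bin(x ^ y).count("1")


print(hamming_distance("101010", "101110"))  # 1
print(hamming_distance("1001", "1011"))      # 1
print(hamming_distance("101111", "101011"))  # 1
print(hamming_distance_int(0b1001, 0b1011))  # 1
```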

Use in Digital Communication Systems

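Hamming distance also plays a crucial role in the error-correcting codes used by digital communication and storage systems. By adding redundant bits to the transmitted data, these systems can identify and correct errors. In a Hamming code, for example, the sender computes parity bits from the data bits so that any two valid codewords differ in at least three positions; the receiver can then correct any single-bit error by choosing the nearest valid codeword. An extended Hamming code adds one more overall parity bit, raising this minimum distance to four so that double-bit errors can also be detected.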
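To make the idea concrete, here is a minimal sketch of the classic Hamming(7,4) code, which protects 4 data bits with 3 parity bits. The bit layout (parity at positions 1, 2 and 4) is the textbook arrangement; the function names are hypothetical, and real systems typically implement this in hardware or with bitwise operations on whole words.

```python
def hamming74_encode(data: list[int]) -> list[int]:
    """Encode 4 data bits as a 7-bit Hamming(7,4) codeword.

    Bit positions are numbered 1..7; parity bits sit at positions 1, 2 and 4,
    and each one makes the parity of the positions it covers even.
    """
    d3, d5, d6, d7 = data
    p1 = d3 ^ d5 ^ d7  # covers positions 1, 3, 5, 7
    p2 = d3 ^ d6 ^ d7  # covers positions 2, 3, 6, 7
    p4 = d5 ^ d6 ^ d7  # covers positions 4, 5, 6, 7
    return [p1, p2, d3, p4, d5, d6, d7]


def hamming74_correct(word: list[int]) -> list[int]:
    """Fix at most one flipped bit in a received 7-bit word.

    XOR-ing the positions of all 1 bits gives the syndrome: 0 for a valid
    codeword, otherwise the position of the single flipped bit.
    """
    syndrome = 0
    for pos, bit in enumerate(word, start=1):
        if bit:
            syndrome ^= pos
    fixed = list(word)
    if syndrome:
        fixed[syndrome - 1] ^= 1
    return fixed


def hamming74_data(word: list[int]) -> list[int]:
    """Extract the 4 data bits (positions 3, 5, 6, 7) from a codeword."""
    return [word[2], word[4], word[5], word[6]]


sent = hamming74_encode([1, 0, 1, 1])  # [0, 1, 1, 0, 0, 1, 1]
received = list(sent)
received[4] ^= 1                       # flip bit 5 in transit
print(hamming74_data(hamming74_correct(received)))  # [1, 0, 1, 1]
```

With two flipped bits, this decoder would "correct" the word to the wrong codeword; that is exactly the case the extended code's extra overall parity bit is meant to detect.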

Hamming distance is an essential metric for ensuring the reliability of digital communications. By measuring the number of bit differences between strings, and by designing codes whose valid codewords are far apart, we can detect and correct transmission errors, making data transmission more reliable.

Distance Metrics: A Complementary Perspective

In the realm of data analysis and comparison, we often encounter the need to quantify the dissimilarity between objects. This is where distance metrics come into play, offering a complementary perspective to similarity metrics.

Distance metrics, in contrast to similarity metrics, provide a measure of how different objects are. They assign smaller values to more similar objects and larger values to more dissimilar objects. This distinction allows distance metrics to excel in tasks where it’s crucial to identify and quantify differences.

Similarity and distance are inversely related: a high similarity value typically corresponds to a low distance value, and a low similarity value to a high distance value. For measures bounded between 0 and 1, the conversion is often direct; for example, Jaccard distance is 1 minus Jaccard similarity, and cosine distance is commonly defined as 1 minus cosine similarity.

Example

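Consider two documents, Document A and Document B, whose cosine similarity is 0.8, suggesting that they are quite similar. A distance metric describes the same pair from the opposite direction. If both document vectors are first normalized to unit length, their Euclidean distance is determined by the cosine similarity: √(2 − 2 × 0.8) = √0.4 ≈ 0.63. Unlike cosine similarity, however, Euclidean distance has no fixed upper bound for unnormalized vectors, so whether a given distance counts as small or large depends on the scale of the data.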
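The link between the two views can be checked numerically: for unit vectors u and v, ‖u − v‖² = 2 − 2(u · v). The sketch below uses two hypothetical word-count vectors; the helper names are illustrative.

```python
import math


def unit(v: list[float]) -> list[float]:
    """Scale v to length 1 by dividing every element by its magnitude."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def dot(a: list[float], b: list[float]) -> float:
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def euclidean(a: list[float], b: list[float]) -> float:
    """Straight-line distance between two points."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Hypothetical word-count vectors for two documents
doc_a, doc_b = [3.0, 4.0, 0.0], [4.0, 3.0, 0.0]
ua, ub = unit(doc_a), unit(doc_b)

cos_sim = dot(ua, ub)     # cosine similarity (dot product of unit vectors)
cos_dist = 1 - cos_sim    # cosine distance
eucl = euclidean(ua, ub)  # Euclidean distance between the unit vectors
print(round(cos_sim, 4), round(cos_dist, 4), round(eucl, 4))  # 0.96 0.04 0.2828
```

All three numbers describe the same pair: as the cosine similarity rises toward 1, both distances shrink toward 0.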

Common Distance Metrics

There are several commonly used distance metrics, each tailored to specific types of data. Some popular distance metrics include:

- Euclidean Distance: Measures the straight-line distance between two points in a vector space.

- Manhattan Distance: Sums the absolute differences between the coordinates of two points.

- Minkowski Distance: Generalizes the Euclidean and Manhattan distances through a parameter p; p = 1 gives the Manhattan distance and p = 2 the Euclidean distance.

- Jaccard Distance: Measures the distance between sets by dividing the number of elements that belong to exactly one of the sets by the number of elements in their union; this equals 1 minus the Jaccard similarity.
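The four metrics above fit in a few lines of Python. This is a minimal sketch with illustrative function names; for production work, SciPy's `scipy.spatial.distance` module provides tested implementations of these and many other metrics.

```python
def minkowski(a: list[float], b: list[float], p: float) -> float:
    """(sum over i of |a_i - b_i|^p)^(1/p); a proper metric for p >= 1."""
    if len(a) != len(b):
        raise ValueError("points must have the same number of coordinates")
    if p < 1:
        raise ValueError("p must be at least 1")
    return sum(abs(x - y) ** p for x, y in zip(a, b)) ** (1 / p)


def manhattan(a: list[float], b: list[float]) -> float:
    return minkowski(a, b, 1)  # sum of absolute coordinate differences


def euclidean(a: list[float], b: list[float]) -> float:
    return minkowski(a, b, 2)  # straight-line distance


def jaccard_distance(a: set, b: set) -> float:
    """|A Δ B| / |A ∪ B|, i.e. 1 - Jaccard similarity (0.0 for two empty sets)."""
    union = a | b
    if not union:
        return 0.0
    return len(a ^ b) / len(union)


print(manhattan([0, 0], [3, 4]))  # 7.0
print(euclidean([0, 0], [3, 4]))  # 5.0
print(jaccard_distance({"apple", "banana", "cherry"}, {"banana", "kiwi", "pineapple"}))  # 0.8
```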

Choosing the Right Distance Metric

Selecting the appropriate distance metric is crucial for accurate and meaningful results. Factors to consider include:

- Type of data: Some distance metrics are designed specifically for numerical data, while others are suitable for categorical data or text.

- Task objective: The goal of the analysis determines which distance metric fits. For example, detecting bit errors in fixed-length codewords calls for Hamming distance, while clustering numeric feature vectors commonly uses Euclidean or Manhattan distance.

Distance metrics provide a complementary perspective to similarity metrics by quantifying dissimilarity. Understanding the relationship between these metrics is essential for choosing the most suitable metric for a given task. By leveraging distance metrics, we gain a deeper insight into the differences between objects, enabling more precise and nuanced data analysis.

Choosing the Right Similarity Metric

When it comes to similarity measurement, selecting the right metric is crucial for obtaining meaningful results. Just as the right tool makes a job easier, choosing an appropriate similarity metric is a prerequisite for accurate and effective analysis.

Different data types and task objectives call for different similarity metrics. For instance, cosine similarity is a common choice for comparing documents represented as word-frequency vectors, because it depends on the direction of the vectors rather than their length, so documents of different lengths can still score as similar. Jaccard similarity excels at measuring set similarity and text overlap.

When the goal is detecting errors in strings, Levenshtein distance is a natural fit, because it counts the minimum number of insertions, deletions, and substitutions separating two strings. Similarly, Hamming distance shines when comparing equal-length binary strings: it counts the bit positions that differ, which makes it a core tool for error detection and digital communications.

To ensure you choose the right similarity metric, consider the following guidelines:

- Data Type: Identify the type of data you’re working with. Whether it’s text, vectors, sets, or binary strings, each has its recommended similarity metrics.

- Task Objective: Determine your analysis goal, since different tasks call for different metrics. For instance, near-duplicate detection often relies on set overlap measured with Jaccard similarity, while ranking items in a recommendation system often compares user and item vectors with cosine similarity.

By carefully considering these factors, you can select the optimal similarity metric for your specific application. Remember, the right metric is the key to unlocking valuable insights and driving successful outcomes.