Mastering Measurements: A Comprehensive Guide To Accuracy, Precision, And Clarity

To write out measurements, begin by understanding measurement concepts, including the International System of Units (SI) and prefixes. Interpret significant figures to represent precision, considering accuracy and error. Manage measurement uncertainty through standard deviation and confidence intervals. Ensure accuracy and precision by reducing bias, promoting consistency, and understanding variability. Express measurements clearly using proper units, scientific notation, or engineering notation.

Measurement: Understanding the Core Concepts

In the realm of science and engineering, measurements play a pivotal role in unraveling the mysteries of the natural world and shaping our technological advancements. But what exactly is measurement? Let’s dive in and explore the essence of this fundamental concept.

Measurement involves quantifying a property of an object or event using a defined standard. It provides a numerical representation of a physical attribute, enabling us to compare and interpret data objectively. In contrast to estimation, which relies on approximation and judgment, measurement employs standardized units and precise instruments to ensure accuracy and consistency.

The International System of Units (SI), maintained by the International Bureau of Weights and Measures (BIPM), serves as the global standard for measurement. SI comprises seven base units: the meter (length), kilogram (mass), second (time), ampere (electric current), kelvin (temperature), mole (amount of substance), and candela (luminous intensity). These base units are the foundation upon which derived units are built, representing quantities such as force, energy, and velocity.

Delving into the International System of Units: A Standardized Compass for Measurement

In the realm of science, measurement reigns as the cornerstone of knowledge acquisition. It’s not just about making guesses (estimation); it’s about assigning precise numbers to the world around us, allowing us to quantify our observations and compare them objectively. To ensure uniformity in this measurement process, scientists have devised a universal language: the International System of Units (SI).

The SI consists of base units, which are treated as dimensionally independent of one another, and derived units, which are formed by multiplying and dividing powers of the base units. The seven base units of the SI are:

- Meter (m): Length

- Kilogram (kg): Mass

- Second (s): Time

- Ampere (A): Electric current

- Kelvin (K): Temperature

- Mole (mol): Amount of substance

- Candela (cd): Luminous intensity
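Because every derived unit is a product of powers of the base units, one convenient way to work with them is to record each unit as a set of exponents on the base-unit symbols. The following Python sketch assumes that representation; the names `Unit`, `mul`, and `power` are hypothetical helpers, not a library API. It rebuilds the SI newton (kg·m·s⁻²) and joule (kg·m²·s⁻²) from the base units.

```python
# A unit is modeled as a dict mapping base-unit symbols to integer exponents.
Unit = dict[str, int]

def mul(a: Unit, b: Unit) -> Unit:
    """Multiply two units by adding their exponents."""
    out = dict(a)
    for symbol, exp in b.items():
        out[symbol] = out.get(symbol, 0) + exp
    # Drop symbols whose exponents cancelled out (e.g. m·m⁻¹).
    return {s: e for s, e in out.items() if e != 0}

def power(a: Unit, n: int) -> Unit:
    """Raise a unit to an integer power by scaling every exponent."""
    return {s: e * n for s, e in a.items()}

metre: Unit = {"m": 1}
kilogram: Unit = {"kg": 1}
second: Unit = {"s": 1}

velocity = mul(metre, power(second, -1))               # m·s⁻¹
newton = mul(kilogram, mul(metre, power(second, -2)))  # kg·m·s⁻² (force)
joule = mul(newton, metre)                             # kg·m²·s⁻² (energy)

print(newton)  # {'kg': 1, 'm': 1, 's': -2}
```

Keeping exponents rather than names makes dimensional checks mechanical: two quantities can be added only if their exponent dicts are equal.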

Prefixes do a different job from derived units: they scale any unit, base or derived, by a power of ten. Prefixes such as kilo (1000), milli (1/1000), and nano (1/1,000,000,000) let us write very large and very small quantities compactly. For example, a kilometer (km) is simply 1000 meters, and a millisecond (ms) is 1/1000 of a second.

The SI serves as a common ground for scientists worldwide, ensuring that measurements from different labs and countries can be directly compared and analyzed. It’s a testament to the power of standardization and the pursuit of objective understanding.

The Magic of Prefixes: Simplifying Scientific Notation

In the vast world of scientific research, where numbers can soar to astronomical heights or plummet to microscopic depths, the language of measurement is paramount. Scientific notation, a tool that compresses these extreme values into manageable expressions, becomes increasingly indispensable. But within this notation lies a secret weapon: prefixes.

These prefixes, like magical incantations, transform unwieldy numbers into comprehensible values. Take the distance to the nearest star beyond the Sun, Proxima Centauri. At a daunting 40,208,000,000,000 kilometers away, its immensity is almost impossible to grasp. But when we express that distance in meters and invoke the prefix “peta” (P, 10^15), it becomes a more digestible 40.2 Pm. (Writing “Tkm” is tempting, but SI rules forbid stacking two prefixes on one unit.)

Why Prefixes Matter

The magic of prefixes lies in their ability to scale numbers while maintaining precision. The International System of Units (SI) has established a standard set of prefixes, each representing a specific power of ten. This allows us to express values in a clear and concise manner, facilitating our understanding of scientific data.

A Tale of Prefixes

Let’s delve into the enchanting world of prefixes and their practical applications.

- Femto (f): Shrinking numbers to the realm of the incredibly small. Atomic nuclei are only a few femtometers across (1 fm = 10^-15 meters).

- Nano (n): Bridging the gap between the microscopic and the macroscopic. Visible light has wavelengths of roughly 400 to 700 nm (1 nm = 10^-9 meters), while a human hair is on the order of 100,000 nm thick.

- Micro (µ): Diving into the world of microbiology. The diameter of a typical bacterial cell is around 1 µm (1 µm = 10^-6 meters).

- Kilo (k): Expanding our horizons into larger scales. A kilogram is 1,000 grams (1 kg = 10^3 g), and even the mass of the Earth, about 5.972 × 10^24 kg, is quoted in kilograms.

- Mega (M): Ascending to the realm of the colossal. The average distance from the Earth to the Moon is approximately 384.4 Mm, or 384,400 km (1 Mm = 10^6 meters).
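Converting between prefixed forms of the same unit amounts to shifting a power of ten. A minimal Python sketch, assuming values are plain numbers and prefixes are passed as their symbols; `PREFIX_EXPONENTS` and `convert` are illustrative names, and the ASCII stand-in `u` for micro is a convenience assumption rather than an SI symbol:

```python
# Power-of-ten exponent for each SI prefix used in this section.
# "µ" is the micro sign; "u" is accepted as an ASCII stand-in (assumption, not SI).
PREFIX_EXPONENTS = {
    "f": -15, "p": -12, "n": -9, "µ": -6, "u": -6, "m": -3,
    "": 0, "k": 3, "M": 6, "G": 9, "T": 12, "P": 15,
}

def convert(value: float, from_prefix: str, to_prefix: str) -> float:
    """Re-express a value given with one prefix using another prefix of the same unit."""
    shift = PREFIX_EXPONENTS[from_prefix] - PREFIX_EXPONENTS[to_prefix]
    if shift >= 0:
        return value * 10**shift
    # Divide by an exact integer power of ten rather than multiplying by 0.001 etc.
    return value / 10**-shift

print(convert(384_400, "k", "M"))             # Earth-Moon distance, km -> Mm: 384.4
print(convert(40_208_000_000_000, "k", "P"))  # Proxima Centauri, km -> Pm: 40.208
```

Dividing by an exact integer power of ten when the shift is negative keeps results such as 384.4 free of the tiny binary rounding residue that multiplying by 0.001 can introduce.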

By mastering the art of prefixes, we unlock a treasure trove of understanding in the enigmatic world of scientific measurement. Their simplifying power and standardized usage empower us to communicate complex ideas with clarity and precision.

Understanding the Significance of Significant Figures

In the realm of science and measurement, significant figures play a crucial role in representing the precision of our observations. They are the digits in a measurement that are known with certainty, plus one additional digit that is estimated. By understanding and using significant figures correctly, we ensure that the numbers we report reflect the actual precision of our instruments, claiming neither more nor less certainty than the measurement supports.

Significant figures serve as a shorthand for expressing the reliability of a measurement: they show how many digits can be trusted and, by implication, the size of the uncertainty. For instance, if we measure the length of an object as 12.30 cm, all four digits are significant: 1, 2, and 3 are known with certainty, and the final 0 is the estimated digit. Writing that zero claims that the length is closer to 12.30 cm than to 12.29 cm or 12.31 cm; reporting 12.3 cm would claim only that it is closer to 12.3 cm than to 12.2 cm or 12.4 cm.

It’s important to note that significant figures are not the same as decimal places. Decimal places are simply the digits after the decimal point, whether or not they carry information about precision. For example, 0.0012 has four decimal places but only two significant figures (1 and 2); the leading zeros merely locate the decimal point.
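The counting rules above can be made mechanical. The Python sketch below works on numbers written as strings, because a float such as `12.30` forgets its trailing zero. It assumes one common convention that the text leaves open: trailing zeros in a whole number without a decimal point (such as `1200`) are treated as not significant, since they could be placeholders. `count_sig_figs` and `count_decimal_places` are hypothetical helper names, and zero itself is not handled.

```python
def count_sig_figs(text: str) -> int:
    """Count significant figures in a non-zero number written as a string.

    Assumed convention: trailing zeros in a number without a decimal point
    (e.g. "1200") are treated as NOT significant, because they are ambiguous.
    """
    s = text.strip().lstrip("+-")
    mantissa = s.lower().split("e")[0]   # ignore any exponent part, e.g. "1.20e3"
    has_point = "." in mantissa
    # Leading zeros only locate the decimal point, so they never count.
    digits = mantissa.replace(".", "").lstrip("0")
    if not has_point:
        digits = digits.rstrip("0")      # ambiguous trailing zeros in whole numbers
    return len(digits)

def count_decimal_places(text: str) -> int:
    """Count digits after the decimal point (plain notation only, no exponent)."""
    _, _, fraction = text.partition(".")
    return len(fraction)

print(count_sig_figs("12.30"))                                   # 4
print(count_sig_figs("0.0012"), count_decimal_places("0.0012"))  # 2 4
```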

Accuracy vs. Precision: Nail the Science of Measurement

Imagine you’re a target shooter aiming for a bullseye. Your accuracy measures how close your shots come to the center, while your precision tells you how consistently you can hit the same spot.

Accuracy refers to the closeness of a measurement to the true value. Like a sharpshooter hitting the bullseye, a highly accurate measurement is spot-on. Precision, on the other hand, describes how closely repeated measurements agree with one another. If your shots are tightly clustered but far from the bullseye, they are precise but not accurate.
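The target analogy translates directly into two numbers: the bias (mean reading minus the true value) measures accuracy, and the standard deviation of the readings measures precision. A minimal Python sketch with hypothetical readings of an assumed 10.00 reference value; `summarize` is an illustrative name:

```python
from statistics import mean, stdev

TRUE_VALUE = 10.00  # assumed reference value, e.g. a calibrated 10.00 g mass

def summarize(readings: list[float]) -> tuple[float, float]:
    """Return (bias, spread): bias reflects accuracy, spread reflects precision."""
    bias = mean(readings) - TRUE_VALUE  # systematic offset from the true value
    spread = stdev(readings)            # sample standard deviation of the readings
    return bias, spread

precise_not_accurate = [10.52, 10.51, 10.53, 10.50, 10.54]  # tight cluster, off target
accurate_not_precise = [9.70, 10.30, 9.90, 10.20, 9.90]     # centred on target, scattered

for name, data in [("precise", precise_not_accurate), ("accurate", accurate_not_precise)]:
    bias, spread = summarize(data)
    print(f"{name}: bias = {bias:+.3f}, spread = {spread:.3f}")
```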

Significant figures communicate precision rather than accuracy. They represent the number of meaningful digits in a measurement, telling us the level of certainty we can have in the result. For example, a measurement of 2.00 m has three significant figures, indicating that it was reported to the nearest centimeter.

For the same quantity, more significant figures indicate a more precise measurement, typically one made with a finer instrument or technique. But even a precise measurement may not be accurate if the instrument itself is biased or the technique is flawed.

Understanding the difference between accuracy and precision is essential for interpreting scientific data. By considering both factors, we can make better judgments about the reliability and significance of our measurements.

The Unforgiving Truth: Errors Lurk in Your Measurements

In the realm of science and engineering, measurements are like the compass that guides our understanding of the world. But it’s not always smooth sailing. Errors, like uninvited guests, can creep into our measurements, threatening their accuracy and precision.

Absolute Error: The absolute error is the magnitude of the difference between the measured value and the true value, |measured − true|. It tells us how far off our measurement is, regardless of whether it is too high or too low.

Percent Error: For a more relative perspective, we can calculate the percent error. It’s like giving the absolute error a percentage makeover. By dividing the absolute error by the true value and multiplying by 100, we express the error as a convenient percentage.
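Both error measures are one-line formulas. A minimal Python sketch; the pendulum values are hypothetical, and 9.81 m/s² is used as the reference value for gravitational acceleration:

```python
def absolute_error(measured: float, true: float) -> float:
    """|measured - true|, in the same units as the measurement."""
    return abs(measured - true)

def percent_error(measured: float, true: float) -> float:
    """Absolute error as a percentage of the (non-zero) true value."""
    if true == 0:
        raise ValueError("percent error is undefined when the true value is zero")
    return absolute_error(measured, true) / abs(true) * 100

# Hypothetical example: a pendulum experiment estimates g as 9.60 m/s²,
# compared against a reference value of 9.81 m/s².
print(f"{absolute_error(9.60, 9.81):.2f} m/s²")  # 0.21 m/s²
print(f"{percent_error(9.60, 9.81):.1f} %")      # 2.1 %
```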

These error calculations are essential for evaluating the reliability of our measurements. If the absolute or percent error is too large, we need to re-examine our measurement techniques or the instruments we’re using.

Understanding the Significance of Standard Deviation in Data Analysis

In the realm of measurement, understanding the variability of data is crucial. Enter standard deviation, a statistical measure that quantifies the spread of data points around their average value.

Imagine a group of students taking a test. Their scores may vary, with some scoring higher and some lower than the average. Standard deviation tells us how much the scores deviate from this average. A high standard deviation indicates a wide spread of scores, while a low standard deviation suggests that the scores are clustered more closely around the mean.
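In formula terms, the sample standard deviation is the square root of the sum of squared deviations from the mean, divided by n − 1. The Python sketch below writes that out by hand for two hypothetical classes with the same average score and checks it against `statistics.stdev` from the standard library (`statistics.pstdev` would divide by n instead, for a complete population). `sample_std` is an illustrative name.

```python
import math
from statistics import mean, stdev

def sample_std(values: list[float]) -> float:
    """Sample standard deviation: sqrt(sum of squared deviations / (n - 1))."""
    m = mean(values)
    return math.sqrt(sum((x - m) ** 2 for x in values) / (len(values) - 1))

class_a = [70, 72, 74, 76, 78]  # hypothetical scores clustered around 74
class_b = [50, 62, 74, 86, 98]  # same mean, much wider spread

for scores in (class_a, class_b):
    # The hand-written version should agree with the standard library.
    print(mean(scores), round(sample_std(scores), 2), round(stdev(scores), 2))
```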

Standard deviation is a critical tool for data analysis because it provides insights into the reliability and variability of measurements. In scientific experiments, a low standard deviation indicates that the measurements are consistent and reproducible. Conversely, a high standard deviation suggests that the data may be unreliable or influenced by external factors.

Moreover, standard deviation plays a crucial role in estimating the uncertainty of measurements. Dividing the standard deviation of a series of measurements by the square root of the number of measurements gives the standard error of the mean, from which we can construct a confidence interval: the range of values within which the true value is likely to fall. This interval helps us assess the reliability of our conclusions and make informed decisions based on the data.

Understanding Measurement Uncertainty: The Role of Confidence Intervals

In the realm of science, measurements play a pivotal role in unraveling the secrets of our world. Yet, these measurements are not always absolute truths. They come with an inherent degree of uncertainty that we must acknowledge and manage.

One powerful tool for quantifying this uncertainty is the confidence interval. A confidence interval is a range of values that, with a certain level of confidence, is likely to contain the true value of the measurement. It’s like a safety net that helps us delineate the realm of possibilities around our observations.

To understand confidence intervals, let’s imagine you’re measuring the height of a tree. You might take multiple measurements and get slightly different values each time. This variation is due to error, which is an unavoidable part of any measurement process.

To account for this error, we use statistics to define a range of values within which we can be confident that the true height of the tree lies. This range is our confidence interval. It’s not a guarantee, but a probabilistic statement that helps us make informed judgments about the accuracy of our measurement.

The width of the confidence interval represents the level of uncertainty. A wider interval indicates a greater degree of uncertainty, while a narrower interval suggests a more precise measurement. The confidence level describes the reliability of the method rather than of any single interval. A 95% confidence level, a common choice in science, means that if we repeated the whole experiment many times, about 95% of the intervals constructed this way would contain the true value.
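As a sketch of how such an interval is computed for a mean, assuming the repeated measurements are independent and roughly normally distributed: take the standard error s/√n and multiply it by a critical value from Student's t-distribution. The tree heights below are hypothetical, and the critical value 2.776 (95%, 4 degrees of freedom) is hard-coded from a t-table because Python's standard library has no t quantile function; with SciPy installed, `scipy.stats.t.ppf(0.975, df)` returns it.

```python
import math
from statistics import mean, stdev

# Hypothetical repeated measurements of one tree's height, in meters.
heights = [12.1, 12.4, 12.2, 12.5, 12.3]

n = len(heights)
m = mean(heights)
standard_error = stdev(heights) / math.sqrt(n)  # s / sqrt(n)

# Two-sided 95% critical value of Student's t with n - 1 = 4 degrees of freedom,
# taken from a t-table (about 2.776). It must be looked up again for a different n.
T_CRIT_95_DF4 = 2.776

half_width = T_CRIT_95_DF4 * standard_error
low, high = m - half_width, m + half_width
print(f"mean = {m:.2f} m, 95% CI = [{low:.2f}, {high:.2f}] m")
```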

By understanding and interpreting confidence intervals, we can make more informed decisions about the accuracy of our measurements and draw conclusions that are both reliable and representative of the true state of the world.

Exploring the Margin of Error: Uncovering Uncertainty in Measurements

In the realm of scientific inquiry, precision and accuracy are paramount. When conducting measurements, we often encounter uncertainties that can potentially skew our results. Enter the margin of error, a statistical concept that serves as a guiding light, helping us express the level of uncertainty associated with our measurements.

The margin of error is the half-width of a confidence interval: reporting a result as the measured value plus or minus the margin of error gives the range within which the true value is likely to fall. It allows scientists to account for the random variation that arises during the measurement process.

Calculating the margin of error involves determining the standard deviation of the data, which measures the spread of the data points around the mean. A smaller standard deviation indicates a higher level of precision, while a larger standard deviation suggests greater scatter in the data.

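When the result is reported as the mean of n measurements, the standard deviation s is first divided by √n to give the standard error of the mean, s/√n. Multiplying the standard error by a confidence factor (a critical value) yields the margin of error. The confidence factor depends on the desired level of confidence: for 95% confidence, commonly used in scientific applications, it is about 1.96 for large samples and somewhat larger, taken from the t-distribution, for small ones.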
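For a result reported as the mean of n readings, the margin of error is a critical value times the standard error s/√n, so it shrinks as measurements accumulate. The sketch below assumes a large-sample normal approximation (critical value about 1.96 at 95%, obtained from `statistics.NormalDist`); for small samples the Student's t value is larger. The standard deviation of 0.5 is a hypothetical value, and `margin_of_error` is an illustrative name.

```python
import math
from statistics import NormalDist

def margin_of_error(s: float, n: int, confidence: float = 0.95) -> float:
    """Margin of error for a mean of n measurements with sample standard deviation s.

    Uses the normal critical value (about 1.96 at 95%), a large-sample
    approximation; small samples need the larger Student's t value instead.
    """
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # two-sided critical value
    return z * s / math.sqrt(n)

s = 0.5  # hypothetical standard deviation of individual readings
for n in (4, 16, 64):
    print(n, round(margin_of_error(s, n), 3))
```

Quadrupling the number of measurements halves the margin of error, so each further gain in certainty costs progressively more effort.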

By incorporating the margin of error into our measurements, we acknowledge the inherent limitations of our instruments and methodologies. It allows us to quantify the uncertainty and provide a more informed interpretation of our results.

In real-world applications, the margin of error plays a critical role. For instance, in medicine, it helps healthcare professionals interpret diagnostic test results and make informed decisions about patient care. In engineering, it aids in designing and constructing structures that can withstand potential uncertainties in material properties and environmental conditions.

By embracing the margin of error, we gain a deeper understanding of the precision and accuracy of our measurements. It empowers us to make informed inferences, communicate our findings with greater clarity, and ultimately advance our knowledge and understanding of the world around us.

Identifying Bias and Calibration for Accurate Measurements

In the realm of scientific inquiry, the pursuit of accurate and precise measurements is paramount. However, lurking within our methods lies a formidable foe: bias. Bias can subtly distort our measurements, leading to inaccurate conclusions. Identifying and minimizing bias is therefore essential for ensuring the integrity of our scientific endeavors.

One common source of bias stems from instrumental limitations. Measuring devices may possess inherent biases due to factors such as design flaws, aging, or environmental conditions. To counteract this, regular calibration against reference standards is crucial. Calibration involves comparing the instrument’s readings with a known, accurate source, thereby identifying any deviations and applying necessary corrections.
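A common way to apply calibration corrections, assuming the instrument's error is linear (a constant offset plus a gain error), is to fit a straight line through readings taken on reference standards and then invert it. The sketch below writes out an ordinary least-squares fit by hand; the reference values and readings are hypothetical, and `fit_linear_calibration` and `correct` are illustrative names. Instruments with nonlinear errors would need a different model.

```python
def fit_linear_calibration(reference: list[float], readings: list[float]) -> tuple[float, float]:
    """Least-squares fit of reading = gain * reference + offset."""
    n = len(reference)
    mean_x = sum(reference) / n
    mean_y = sum(readings) / n
    sxx = sum((x - mean_x) ** 2 for x in reference)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(reference, readings))
    gain = sxy / sxx
    offset = mean_y - gain * mean_x
    return gain, offset

def correct(reading: float, gain: float, offset: float) -> float:
    """Invert the fitted line to estimate the true value behind a raw reading."""
    return (reading - offset) / gain

# Hypothetical check against three reference standards (e.g. 0, 50 and 100 °C).
reference = [0.0, 50.0, 100.0]
readings = [1.0, 52.0, 103.0]  # instrument reads high, more so at the top of the range

gain, offset = fit_linear_calibration(reference, readings)
print(f"gain = {gain:.3f}, offset = {offset:.3f}")
print(f"raw 77.5 -> corrected {correct(77.5, gain, offset):.2f}")
```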

Another source of bias can arise from human error. Our own perceptions and preconceptions can influence the way we interpret data. To mitigate this, blind trials and double-checking can be employed. In a blind trial, the experimenter is unaware of the specific treatment or condition being tested, reducing the risk of conscious or unconscious bias. Double-checking involves having multiple observers independently record measurements, comparing their results to identify and eliminate any discrepancies.

Environmental factors can also introduce bias if not carefully controlled. Temperature fluctuations, vibration, or electromagnetic interference can affect the accuracy of measurements. By establishing a controlled laboratory environment and using appropriate shielding or damping techniques, we can minimize these external influences.

In conclusion, identifying and minimizing bias are crucial for ensuring accurate measurements. By employing rigorous calibration procedures, implementing blind trials and double-checking, and controlling environmental factors, we can mitigate the impact of bias and obtain reliable data that can lead to sound scientific conclusions.

Repeatability and Reproducibility: Bedrocks of Measurement Consistency

Precise and accurate measurements are crucial for meaningful scientific and engineering endeavors. To ensure consistency in measurements, scientists and engineers rely on the principles of repeatability and reproducibility.

Repeatability refers to the ability to obtain the same measurement result when repeating the measurement under the same conditions, using the same measuring instrument and operator. Good repeatability means that random errors and fluctuations in the process are small; they can never be eliminated entirely. It establishes a baseline for assessing the reliability of the measurement technique.

In contrast, reproducibility assesses the consistency of measurement results obtained by different observers or laboratories using different instruments. It ensures that the measurement procedure is robust and independent of the specific equipment or operator involved. Reproducibility is essential for ensuring that measurements are comparable across studies and laboratories, fostering collaboration and knowledge sharing.
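One rough way to see the difference in data, assuming each laboratory measures the same sample several times, is to compare the spread within each laboratory (a repeatability estimate) with the spread between laboratory averages (which reproducibility must also absorb). This is a simplified sketch with hypothetical numbers; formal interlaboratory studies use the more careful analysis-of-variance procedure described in ISO 5725.

```python
import math
from statistics import mean, stdev, variance

# Hypothetical results: three labs, same sample, three repeats each.
labs = {
    "A": [10.01, 10.03, 10.02],
    "B": [10.08, 10.10, 10.09],
    "C": [9.97, 9.99, 9.98],
}

# Repeatability: pooled within-lab standard deviation
# (each lab's variance weighted by its degrees of freedom, n - 1).
num = sum((len(v) - 1) * variance(v) for v in labs.values())
den = sum(len(v) - 1 for v in labs.values())
within_lab_sd = math.sqrt(num / den)

# Spread of the lab averages: a large value signals poor reproducibility
# even when every lab is individually repeatable.
between_lab_sd = stdev([mean(v) for v in labs.values()])

print(f"within-lab sd  = {within_lab_sd:.3f}")
print(f"between-lab sd = {between_lab_sd:.3f}")
```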

Achieving both repeatability and reproducibility requires meticulous attention to detail throughout the measurement process. Calibration of instruments, employing standardized measurement protocols, and minimizing human error are critical steps towards ensuring consistent and reliable results. By embracing these principles, scientists and engineers can establish a solid foundation for accurate and trustworthy measurements, enabling advancements in various fields of science and technology.

Describe the concept of variability and its impact on precision.

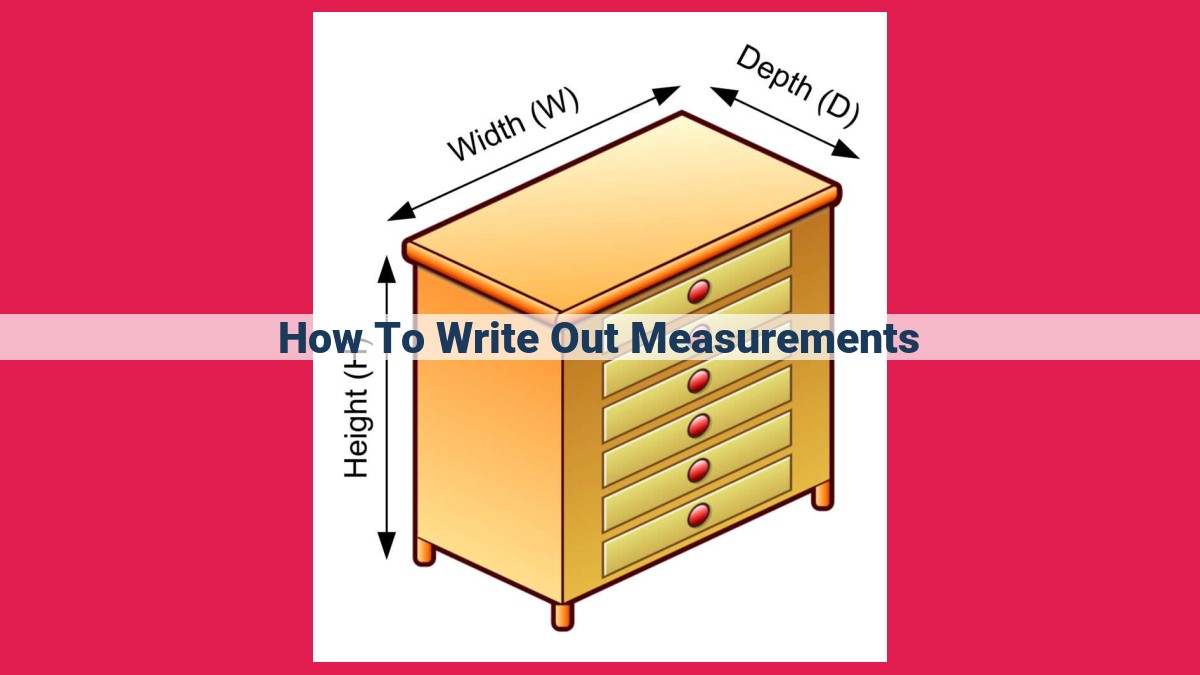

5. Expressing Measurements Clearly and Concisely

Rounding off measurements to an appropriate number of significant figures conveys a clear understanding of the precision of the measurement. Variability is a crucial concept that influences precision.
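Python's built-in `round` counts decimal places, not significant figures, so rounding a measurement to a chosen precision takes a small helper. A minimal sketch; `round_sig` and `format_sig` are hypothetical names. A float cannot carry trailing zeros, so when they matter (1.70 rather than 1.7) the value should be formatted as text:

```python
import math

def round_sig(x: float, sig: int) -> float:
    """Round x to `sig` significant figures (returns a number)."""
    if x == 0:
        return 0.0
    exponent = math.floor(math.log10(abs(x)))  # position of the leading digit
    return round(x, sig - 1 - exponent)

def format_sig(x: float, sig: int) -> str:
    """Format x with exactly `sig` significant figures, keeping trailing zeros."""
    return f"{x:#.{sig}g}"  # '#' stops the 'g' format from dropping trailing zeros

print(round_sig(1.7349, 3))     # 1.73
print(round_sig(0.0012345, 2))  # 0.0012
print(format_sig(1.7, 3))       # 1.70
```

Because floats are binary, values that look like exact halves can round unexpectedly (Python's `round(2.675, 2)` gives `2.67`); the `decimal` module avoids this when exact decimal rounding matters.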

Variability and Precision

Variability refers to the extent to which repeated measurements of the same quantity produce slightly different results. If measurements exhibit high variability, it means their precision is lower.

Impact on Precision

When measurements have high variability, the uncertainty associated with the measurement is greater. This uncertainty reduces the precision, lowering our confidence in any single reading. Conversely, measurements with low variability possess higher precision, meaning repeated readings can be trusted to agree. Low variability alone does not guarantee accuracy, however: a biased instrument can produce tightly clustered readings that are all wrong.

Example

Consider measuring the height of a person using a measuring tape. If one measurement gives 1.72 meters and a repeated measurement gives 1.74 meters, the two readings differ by 0.02 meters. This spread limits the precision of the result: the best estimate is the 1.73-meter average, and the true height may lie anywhere within a range of values around it.

Understanding variability is essential in assessing the precision of measurements. High variability leads to lower precision, while low variability indicates higher precision. By considering variability, scientists and researchers can express their measurements accurately and confidently, ensuring clear and concise communication of their findings.

The Importance of Proper Units in Measurement: A Story of Precision

In the realm of science, accuracy and precision are paramount. Imagine yourself as a culinary apprentice tasked with baking a cake. Your recipe calls for a precise amount of flour, sugar, and baking powder. If you measure these ingredients haphazardly, using a ruler instead of a measuring cup, your cake will likely turn out a disaster.

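The same principle applies to scientific measurements. Proper units do not by themselves make a measurement **accurate (close to the true value) or precise (consistent and reproducible),** but without them neither quality can be communicated: a bare “5” could mean 5 millimeters or 5 kilometers.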

Why Units Matter

Units provide a common reference point for comparing and interpreting measurements. For example, if you measure the length of one table in inches and another in centimeters, you can still compare them, because both units measure the same physical quantity (length) and are related by a fixed conversion factor: 1 inch is exactly 2.54 centimeters.
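Comparing two tables measured in different units means converting both lengths to a common unit first. A minimal Python sketch; the table lengths are hypothetical, `CM_PER_UNIT` and `to_cm` are illustrative names, and only the conversion factors themselves (including the exact 2.54 cm per inch) are fixed by definition:

```python
# Conversion factors to centimeters. The inch is defined as exactly 2.54 cm.
CM_PER_UNIT = {"mm": 0.1, "cm": 1.0, "m": 100.0, "in": 2.54}

def to_cm(value: float, unit: str) -> float:
    """Express a length in centimeters; unknown units raise KeyError."""
    return value * CM_PER_UNIT[unit]

table_a = (60, "in")   # hypothetical table measured in inches
table_b = (150, "cm")  # hypothetical table measured in centimeters

a_cm, b_cm = to_cm(*table_a), to_cm(*table_b)
print(f"A = {a_cm:.1f} cm, B = {b_cm:.1f} cm, difference = {a_cm - b_cm:.1f} cm")
```

Raising `KeyError` for an unknown unit, rather than silently assuming one, keeps a missing or misspelled unit from producing a plausible-looking wrong answer.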

Choosing the Right Units

The choice of units depends on the context of your measurement. In the scientific community, the International System of Units (SI) is the standard. SI units include meters for length, kilograms for mass, and seconds for time.

Prefixes for Convenience

When working with very large or small numbers, we can use prefixes to represent them more conveniently. For instance, instead of writing 0.000001 meters, we can write 1 micrometer.

Proper units are the bedrock of accurate and precise measurements. By using them consistently, we can effectively communicate scientific findings and make informed decisions based on reliable data. Just as precise measurements are essential for a successful cake, they are equally crucial in the world of science and engineering.

The Power of Scientific Notation: Unlocking the Secrets of Large and Small Numbers

In the vast tapestry of the cosmos, we encounter numbers that soar to astronomical heights and plummet to the depths of the infinitely small. Navigating this numerical landscape requires a tool that can tame these unwieldy values: scientific notation. This mathematical marvel allows us to represent extremely large or extremely small numbers in a concise and manageable way.

Consider the colossal number of stars in our galaxy, the Milky Way, commonly estimated at 100 billion or more: 100,000,000,000. Writing out this number in full is an exercise in frustration. Scientific notation comes to the rescue, transforming this behemoth into a nimble 1.0 x 10¹¹ (10 to the power of 11).

Conversely, imagine the minuscule mass of an electron: 0.00000000000000000000000000000091093837015 kg. In scientific notation, this whisper of a number becomes 9.1093837015 x 10⁻³¹ kg (9.1093837015 times 10 to the power of -31).

The Mechanics of Scientific Notation

Scientific notation is built on a simple yet elegant foundation. It consists of two components: a coefficient whose absolute value is at least 1 and less than 10, and a power of 10 with an integer exponent. For example, 6.022 x 10²³ represents the number 602,200,000,000,000,000,000,000.
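Python's `e` format specifier already produces this coefficient-and-exponent form, so writing a number in scientific notation with a chosen number of significant figures is mostly string formatting. A minimal sketch; `scientific` is a hypothetical helper name, and the output uses `x 10^` in place of superscripts:

```python
def scientific(x: float, sig: int) -> str:
    """Write x in scientific notation with `sig` significant figures."""
    # The 'e' format yields a coefficient in [1, 10) and a signed exponent, e.g. '6.022e+23'.
    coefficient, exponent = f"{x:.{sig - 1}e}".split("e")
    return f"{coefficient} x 10^{int(exponent)}"

print(scientific(602_200_000_000_000_000_000_000, 4))  # 6.022 x 10^23
print(scientific(9.1093837015e-31, 11))                # 9.1093837015 x 10^-31
print(scientific(100_000_000_000, 2))                  # 1.0 x 10^11
```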

When to Use Scientific Notation

Whenever you encounter numbers that are too large or too small to write out comfortably, scientific notation is your go-to solution. It’s particularly useful in the fields of science, engineering, and astronomy, where mind-bogglingly large and small numbers are commonplace.

Advantages of Scientific Notation

- Compactness: Shortens long strings of digits, making numbers easier to write, read, and manipulate.

- Precision: Shows exactly which digits are significant. Writing 1.20 x 10³ instead of 1200 makes it clear that the measurement has three significant figures.

- Comparison: Facilitates comparisons between vastly different magnitudes by standardizing their scale.

Embrace the power of scientific notation, and unlock the secrets of the numerical universe. From exploring the boundless wonders of outer space to unraveling the intricacies of subatomic particles, this mathematical instrument will empower you to navigate the realm of large and small numbers with confidence and clarity.

Exploring the Precision and Uncertainty in Measurements

In the realm of science and engineering, accuracy and precision are paramount. Understanding measurement concepts, interpreting significant figures, and managing uncertainty are crucial skills that ensure the reliability of our data.

1. Measurement Concepts

Measurement involves quantifying a physical quantity against a defined standard. The International System of Units (SI) provides a standardized framework for measurements, with base units like the meter for length and the kilogram for mass. SI prefixes such as “kilo-” and “milli-”, together with scientific notation, allow us to represent large or small numbers conveniently.

2. Significant Figures

Significant figures indicate the precision of a measurement. They represent the digits that are known with certainty, plus one estimated digit. Accuracy refers to how close a measurement is to the true value, while precision represents the level of reproducibility or consistency of measurements. The size of a measurement error can be quantified as an absolute error or a percent error.

3. Measurement Uncertainty

Standard deviation measures data dispersion, providing an estimate of measurement uncertainty. Confidence intervals capture the range of possible values with a certain level of confidence. Margin of error expresses the uncertainty associated with a measurement, helping us assess its reliability.

4. Accuracy and Precision in Measurements

Bias can introduce systematic errors, which can be minimized through calibration. Repeatability and reproducibility ensure consistency in measurements, even when performed by different individuals or using different equipment. Variability affects precision and must be considered when evaluating measurement results.

5. Expressing Measurements Clearly

Proper units are essential for clear and unambiguous communication of measurements. Scientific notation condenses large or small numbers, while engineering notation restricts the exponent to multiples of three, so that each power of ten maps directly onto an SI prefix such as “kilo” (10³) or “mega” (10⁶), making numbers more user-friendly in certain applications.
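Engineering notation can be derived from scientific notation by lowering the exponent to the nearest multiple of three and moving the decimal point to match. A minimal Python sketch; `engineering` and its prefix table are hypothetical names covering only femto through peta, with an explicit power of ten as the fallback outside that range:

```python
# SI prefixes for exponents that are multiples of three (femto to peta only).
PREFIXES = {-15: "f", -12: "p", -9: "n", -6: "µ", -3: "m", 0: "",
            3: "k", 6: "M", 9: "G", 12: "T", 15: "P"}

def engineering(x: float, unit: str, sig: int = 3) -> str:
    """Format x with an exponent that is a multiple of 3, shown as an SI prefix."""
    if x == 0:
        return f"0 {unit}"
    # Round to `sig` significant figures first, so the exponent reflects the rounded value.
    coefficient, exp_text = f"{x:.{sig - 1}e}".split("e")
    exponent = int(exp_text)
    eng_exponent = exponent - exponent % 3  # largest multiple of 3 not above exponent
    shift = exponent - eng_exponent         # 0, 1 or 2 places to move the point
    value = float(coefficient) * 10**shift
    decimals = max(sig - 1 - shift, 0)
    prefix = PREFIXES.get(eng_exponent)
    if prefix is None:                      # outside the table: show the power of ten
        return f"{value:.{decimals}f} x 10^{eng_exponent} {unit}"
    return f"{value:.{decimals}f} {prefix}{unit}"

print(engineering(47000, "Ω", 2))         # 47 kΩ
print(engineering(0.00022, "F", 2))       # 220 µF
print(engineering(384_400_000, "m", 4))   # 384.4 Mm
```

Moving the decimal point can add digits that look significant: 47,000 rounded to one significant figure comes out as “50 kΩ”, so the number of significant figures should be stated when it matters.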

Understanding measurement concepts, interpreting significant figures, managing uncertainty, and ensuring accuracy and precision are fundamental principles for scientists and engineers. These skills allow us to make reliable measurements, draw accurate conclusions, and communicate our findings effectively. By mastering these principles, we can enhance the quality and credibility of our scientific endeavors.