Mastering Eigenspaces: A Comprehensive Guide To Basis Calculations

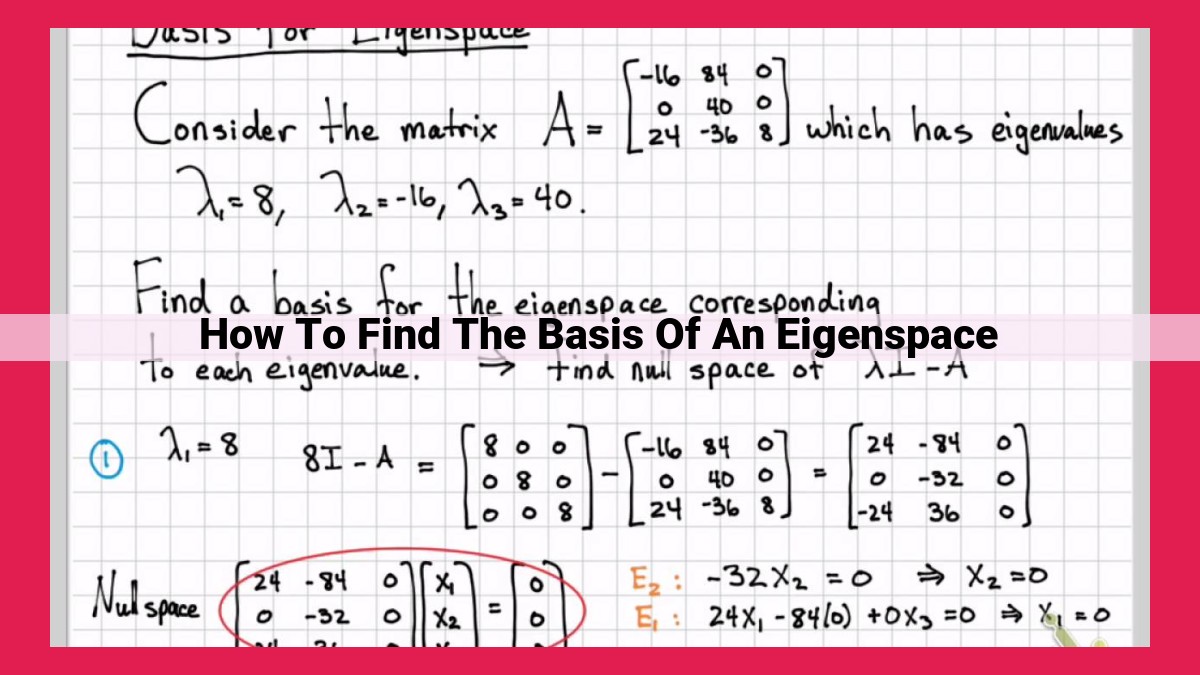

To find a basis for an eigenspace:

- Calculate the eigenvalues of the matrix.

- For each eigenvalue λ, solve the homogeneous system (A - λI)x = 0 (equivalently, Ax - λx = 0) to find the corresponding eigenvectors.

- Check that the eigenvectors you keep are linearly independent, using a determinant (when the vectors form a square matrix) or row reduction.

- If they are linearly independent and span the solution set, the eigenvectors form a basis for the eigenspace associated with the eigenvalue. Otherwise, discard the redundant vectors. The Gram-Schmidt process can optionally turn the remaining ones into an orthonormal basis.
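The steps above can be sketched in Python. This is a minimal sketch that assumes the eigenvalue is already known and exact (an integer or a rational number). The function name `eigenspace_basis` is hypothetical. It row-reduces A - λI to reduced row echelon form using exact `Fraction` arithmetic and builds one basis vector per free variable. Vectors built this way are linearly independent by construction, so no Gram-Schmidt step is needed.

```python
from fractions import Fraction


def eigenspace_basis(A, lam):
    """Return a basis of the eigenspace of A for eigenvalue lam (hypothetical helper).

    The eigenspace is the null space of (A - lam*I). We row-reduce that
    matrix to reduced row echelon form with exact Fractions, then build
    one basis vector per free (non-pivot) column.
    """
    n = len(A)
    # Build M = A - lam*I with exact rational entries.
    M = [[Fraction(A[i][j]) - (Fraction(lam) if i == j else 0) for j in range(n)]
         for i in range(n)]

    pivot_cols = []
    row = 0
    for col in range(n):
        # Find a row at or below `row` with a nonzero entry in this column.
        pivot = next((r for r in range(row, n) if M[r][col] != 0), None)
        if pivot is None:
            continue  # no pivot here: this column is a free variable
        M[row], M[pivot] = M[pivot], M[row]
        # Scale the pivot row so the pivot entry becomes 1.
        p = M[row][col]
        M[row] = [x / p for x in M[row]]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != row and M[r][col] != 0:
                factor = M[r][col]
                M[r] = [a - factor * b for a, b in zip(M[r], M[row])]
        pivot_cols.append(col)
        row += 1
        if row == n:
            break

    free_cols = [c for c in range(n) if c not in pivot_cols]
    basis = []
    for free in free_cols:
        # Set this free variable to 1 and the other free variables to 0,
        # then read each pivot variable off its row of the RREF.
        v = [Fraction(0)] * n
        v[free] = Fraction(1)
        for r, pc in enumerate(pivot_cols):
            v[pc] = -M[r][free]
        basis.append(v)
    return basis
```

For example, `eigenspace_basis([[2, 1], [1, 2]], 3)` returns the single vector `[1, 1]` (as `Fraction` values). A value that is not an eigenvalue yields an empty list.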

Understanding Eigenvalues, Eigenvectors, and Eigenspace

In the realm of linear algebra, eigenvalues, eigenvectors, and eigenspace are fundamental concepts that provide valuable insights into the behavior of matrices. Let’s unravel these enigmatic terms and explore their significance.

An eigenvalue of a square matrix A is a scalar λ for which there is a nonzero vector x satisfying Ax = λx. Multiplying x by A leaves it on the same line through the origin, only scaled by λ. Such a vector x is called an eigenvector. The eigenspace for λ is the subspace consisting of all eigenvectors associated with λ together with the zero vector. Equivalently, it is the null space of A - λI.

Essentially, eigenvalues and eigenvectors reveal the hidden structure of a matrix. Eigenvectors indicate the directions that the transformation represented by the matrix merely stretches, shrinks, or flips, while eigenvalues give the factor by which each such direction is scaled. By understanding these components, we can gain a deeper comprehension of the matrix’s behavior.

Finding Eigenvalues: A Step-by-Step Guide

In the realm of linear algebra, eigenvalues are as enigmatic and profound as hidden treasures. To uncover these elusive values, we embark on a journey to solve the characteristic equation, a mathematical riddle that holds the key to their whereabouts.

Step 1: The Characteristic Equation

The characteristic equation of a square matrix A is a polynomial equation defined as:

det(A - λI) = 0

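where det represents the determinant, λ is the eigenvalue we seek, and I is the identity matrix. This equation effectively asks: “For which values of λ does A - λI lose its invertibility?” A - λI is singular exactly when some nonzero x satisfies (A - λI)x = 0, which is the eigenvector condition Ax = λx.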
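For a 2×2 matrix A = [[a, b], [c, d]], expanding the determinant gives det(A - λI) = (a - λ)(d - λ) - bc = λ² - (a + d)λ + (ad - bc). The Python sketch below computes those coefficients and evaluates det(A - λI) at a candidate λ. It assumes a 2×2 input given as a list of rows, and the helper names `char_poly_2x2` and `det_shifted_2x2` are hypothetical.

```python
def char_poly_2x2(A):
    """Coefficients (1, p, q) of det(A - λI) = λ² + pλ + q for a 2x2 matrix A.

    Expanding (a - λ)(d - λ) - bc gives λ² - (a + d)λ + (ad - bc),
    so p = -trace(A) and q = det(A).
    """
    (a, b), (c, d) = A
    return 1, -(a + d), a * d - b * c


def det_shifted_2x2(A, lam):
    """Evaluate det(A - lam*I) directly; it is zero exactly when lam is an eigenvalue."""
    (a, b), (c, d) = A
    return (a - lam) * (d - lam) - b * c
```

For A = [[2, 1], [1, 2]] the coefficients are (1, -4, 3), and det(A - λI) vanishes at λ = 1 and λ = 3.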

Step 2: Solving the Polynomial

Solving the characteristic equation is akin to embarking on a detective hunt. We meticulously factor the polynomial, searching for its roots. Each root corresponds to an eigenvalue.

Suppose we encounter a factor (λ-α). This means that α is an eigenvalue because at λ=α, the determinant vanishes. By repeating this process for all factors, we uncover the complete set of eigenvalues.
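For a 2×2 matrix the characteristic polynomial is quadratic, so its roots can be found with the quadratic formula instead of factoring by hand. This is a minimal sketch that assumes a 2×2 input, and the name `eigenvalues_2x2` is hypothetical. It uses `cmath.sqrt`, so a negative discriminant produces complex eigenvalues rather than an error.

```python
import cmath


def eigenvalues_2x2(A):
    """Roots of λ² - trace(A)·λ + det(A) = 0 via the quadratic formula.

    A repeated root is returned twice, so the list reflects algebraic
    multiplicity. Complex roots appear when the discriminant is negative.
    """
    (a, b), (c, d) = A
    trace = a + d
    det = a * d - b * c
    disc = trace * trace - 4 * det
    root = cmath.sqrt(disc)
    lam1 = (trace + root) / 2
    lam2 = (trace - root) / 2
    # Return plain floats when both roots are real.
    if disc >= 0:
        return [lam1.real, lam2.real]
    return [lam1, lam2]
```

`eigenvalues_2x2([[2, 1], [1, 2]])` gives `[3.0, 1.0]`, and a matrix with a double root such as `[[3, 1], [0, 3]]` gives `[3.0, 3.0]`. The rotation matrix `[[0, -1], [1, 0]]` gives the complex pair 1j and -1j.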

Step 3: Multiple Eigenvalues and Algebraic Multiplicity

It is not uncommon for an eigenvalue to occur multiple times. The number of times an eigenvalue appears as a root of the characteristic polynomial is known as its algebraic multiplicity. This multiplicity limits the dimension of the associated eigenspace. That dimension, called the geometric multiplicity, is always at least 1 and at most the algebraic multiplicity.
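To compare the two numbers concretely, note that the eigenspace dimension (geometric multiplicity) equals n - rank(A - λI). The sketch below is restricted to 2×2 matrices with exact (integer or rational) entries and computes both multiplicities. The function names are hypothetical. It relies on the fact that a root of the quadratic is a double root exactly when it also makes the derivative 2λ - trace(A) vanish.

```python
def geometric_multiplicity_2x2(A, lam):
    """Dimension of the eigenspace for lam: 2 - rank(A - lam*I)."""
    (a, b), (c, d) = A
    m = [[a - lam, b], [c, d - lam]]
    if all(x == 0 for row in m for x in row):
        rank = 0
    elif m[0][0] * m[1][1] - m[0][1] * m[1][0] == 0:
        rank = 1
    else:
        rank = 2
    return 2 - rank


def algebraic_multiplicity_2x2(A, lam):
    """How many times lam is a root of λ² - trace(A)·λ + det(A)."""
    (a, b), (c, d) = A
    trace, det = a + d, a * d - b * c
    if lam * lam - trace * lam + det != 0:
        return 0
    # lam is a root; it is a double root exactly when it also makes the
    # derivative 2λ - trace vanish.
    return 2 if 2 * lam - trace == 0 else 1
```

For [[3, 1], [0, 3]] and λ = 3 the algebraic multiplicity is 2 but the geometric multiplicity is only 1, while for 3I both are 2.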

Example:

Consider the matrix A = [[3, 1], [0, 3]]. Its characteristic equation is:

det(A - λI) = det([[3-λ, 1],[0, 3-λ]]) = (3-λ)² = 0

In this case, the eigenvalue 3 has algebraic multiplicity 2.

Determining the Eigenvectors: Unveiling Hidden Connections in Matrices

Once we’ve uncovered the eigenvalues that define a matrix’s unique characteristics, it’s time to dive into the world of eigenvectors, which hold the key to understanding the matrix’s hidden structural patterns. Eigenvectors are nonzero vectors that, when multiplied by the matrix, are simply scaled by the corresponding eigenvalue.

To determine these influential vectors, we embark on a mathematical adventure:

- Set Up the System: For each eigenvalue λ, we subtract λ from the matrix’s diagonal elements to form A - λI and write down the homogeneous system (A - λI)x = 0. Its nonzero solutions are exactly the eigenvectors for λ.

- Solve for Variables: With our system of equations in place, we use Gaussian elimination (row reduction) to bring A - λI to row echelon form and express the pivot variables in terms of the free variables. Setting one free variable to 1 and the others to 0, in turn, yields one eigenvector per free variable.

- Check for Linear Independence: Once we have candidate eigenvectors, we verify that they are linearly independent of one another. Vectors built from the free variables as above are independent automatically. For eigenvectors obtained some other way, row reduction (or a determinant, when the vectors form a square matrix) confirms their independence.

- Choose a Basis: If our eigenvectors are linearly independent and span the solution set, they form a basis for the eigenspace, which is the subspace of vectors that the matrix scales by that specific eigenvalue (together with the zero vector). If they’re linearly dependent, we discard the redundant vectors. The Gram-Schmidt process can then be applied, if desired, to orthogonalize the remaining vectors into an orthonormal basis.
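As a worked illustration of these steps, take A = [[3, 1], [0, 3]] (chosen here for illustration) and its eigenvalue 3, and write E₃ for the corresponding eigenspace. Row reduction leaves a single free variable, so the eigenspace is one-dimensional:

```latex
A = \begin{pmatrix} 3 & 1 \\ 0 & 3 \end{pmatrix},
\qquad
A - 3I = \begin{pmatrix} 0 & 1 \\ 0 & 0 \end{pmatrix}

(A - 3I)\mathbf{x} = \mathbf{0}
\;\Longrightarrow\; 0 \cdot x_1 + 1 \cdot x_2 = 0
\;\Longrightarrow\; x_2 = 0, \quad x_1 \text{ free}

\mathbf{x} = x_1 \begin{pmatrix} 1 \\ 0 \end{pmatrix}
\;\Longrightarrow\;
\text{basis of } E_3 = \left\{ \begin{pmatrix} 1 \\ 0 \end{pmatrix} \right\}
```

Although 3 is a double root of (3 - λ)², the eigenspace has only one basis vector.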

By unraveling the eigenvectors, we gain invaluable insights into the matrix’s behavior. They provide a framework for understanding how linear transformations affect vectors and reveal the matrix’s intrinsic structure.

Determining Linear Independence: Unraveling the Secrets of Eigenvectors

In the captivating world of linear algebra, eigenvectors and eigenvalues play a pivotal role in understanding the behavior of matrices. But before delving deeper into their significance, we must first establish whether the eigenvectors we’ve found are linearly independent—a crucial property that ensures they form a robust basis for the eigenspace.

Unveiling Linear Independence

Linear independence, at its core, means that none of the eigenvectors can be expressed as a linear combination of the others. In other words, each one contributes a direction that the others do not already cover.

Method 1: The Determinant Dance

One way to determine linear independence is to calculate the determinant of the matrix formed by placing the eigenvectors as columns. This test applies only when there are exactly n vectors in an n-dimensional space, so that the matrix is square. If the determinant is non-zero, the eigenvectors are linearly independent. A zero determinant indicates that the columns (eigenvectors) are linearly dependent, meaning at least one of them can be expressed as a combination of the others.
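The determinant test can be sketched in Python with exact arithmetic. In this minimal sketch, `determinant` and `independent_by_determinant` are hypothetical helper names. The determinant is computed by Gaussian elimination (tracking the sign flip of each row swap) rather than cofactor expansion. The helper raises an error unless there are exactly n vectors of length n, since a determinant is only defined for a square matrix.

```python
from fractions import Fraction


def determinant(M):
    """Determinant of a square matrix by Gaussian elimination with exact Fractions."""
    n = len(M)
    rows = [[Fraction(x) for x in row] for row in M]
    det = Fraction(1)
    for col in range(n):
        pivot = next((r for r in range(col, n) if rows[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)  # no pivot: the columns are dependent
        if pivot != col:
            rows[col], rows[pivot] = rows[pivot], rows[col]
            det = -det  # a row swap flips the sign
        det *= rows[col][col]
        for r in range(col + 1, n):
            factor = rows[r][col] / rows[col][col]
            rows[r] = [a - factor * b for a, b in zip(rows[r], rows[col])]
    return det


def independent_by_determinant(vectors):
    """n vectors in R^n, placed as columns, are independent iff det != 0."""
    n = len(vectors)
    if any(len(v) != n for v in vectors):
        raise ValueError("determinant test needs exactly n vectors in R^n")
    columns_as_matrix = [[vectors[j][i] for j in range(n)] for i in range(n)]
    return determinant(columns_as_matrix) != 0
```

`independent_by_determinant([[1, 1], [1, -1]])` returns `True` (the determinant is -2), while `[[1, 2], [2, 4]]` returns `False`.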

Method 2: Row Reduction Rhapsody

Another approach uses row reduction. Place the eigenvectors as the rows of a matrix and transform it into row echelon form. If the row echelon form has no rows of zeros, the eigenvectors are linearly independent. This works for any number of vectors: row operations only replace rows with linear combinations of the rows, so if any eigenvector were a linear combination of the others, elimination would produce a row of zeros.
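The row-reduction test can be sketched in Python with exact arithmetic. This minimal sketch uses the hypothetical names `rank` and `independent_by_row_reduction`. It places the vectors as rows, reduces to row echelon form with `Fraction` arithmetic, and counts the nonzero rows. The vectors are independent exactly when that count equals the number of vectors. Unlike the determinant test, it works for any number of vectors.

```python
from fractions import Fraction


def rank(rows):
    """Rank of a matrix via row reduction to echelon form (exact Fractions)."""
    m = [[Fraction(x) for x in row] for row in rows]
    n_rows = len(m)
    n_cols = len(m[0]) if m else 0
    r = 0  # index of the next pivot row
    for col in range(n_cols):
        pivot = next((i for i in range(r, n_rows) if m[i][col] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        for i in range(r + 1, n_rows):
            factor = m[i][col] / m[r][col]
            m[i] = [a - factor * b for a, b in zip(m[i], m[r])]
        r += 1
        if r == n_rows:
            break
    # Rows below r are now all zero; r counts the nonzero rows.
    return r


def independent_by_row_reduction(vectors):
    """Vectors (as rows) are independent iff no zero row appears, i.e. rank == count."""
    return rank(vectors) == len(vectors)
```

For instance, `[[1, 0, 0], [0, 1, 0]]` (two vectors in three dimensions, where no determinant is available) is independent. By contrast, `[[1, 1, 0], [1, 0, 1], [0, 1, -1]]` is not, because the third vector is the first minus the second.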

Building the Basis

Once we confirm that the eigenvectors are linearly independent and that they span the eigenspace (for example, because there is one vector per free variable of (A - λI)x = 0), we can confidently select them as a **basis for the eigenspace**. This means that any vector in the eigenspace can be expressed as a linear combination of these eigenvectors in exactly one way. The basis vectors provide a complete and non-redundant representation of the eigenspace.

Understanding linear independence is essential in the study of eigenvalues and eigenvectors. By determining whether the eigenvectors are linearly independent, we ensure that they provide a robust and reliable basis for the eigenspace. This understanding forms the foundation for further explorations into the fascinating world of linear algebra.

Selecting a Basis for the Eigenspace

Once you have determined the eigenvectors of a matrix, it’s time to select a basis for the eigenspace associated with each eigenvalue.

If the eigenvectors are linearly independent and span the eigenspace, they can be used as the basis for it. This means that the eigenvectors can be combined in different ways to create any vector in the eigenspace.

However, if the eigenvectors are not linearly independent, you need to discard the redundant ones until an independent spanning set remains. The Gram-Schmidt process can handle this and orthogonalization in one pass: each redundant vector reduces to the zero vector, which is dropped, and the surviving vectors are orthonormal and span the eigenspace. Because any linear combination of eigenvectors for the same eigenvalue stays in that eigenspace, the orthonormal vectors are still eigenvectors.

To summarize, the choice of basis for the eigenspace depends on the linear independence of the eigenvectors. If they are linearly independent and span the eigenspace, they can serve as the basis. Otherwise, remove the redundant vectors, or use Gram-Schmidt to obtain an orthonormal basis.
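A Gram-Schmidt pass that also discards redundant vectors might look like the following minimal sketch. It uses the modified Gram-Schmidt variant, which subtracts projections one at a time from the running remainder, with floating-point arithmetic. The function name `gram_schmidt` and the tolerance parameter `tol` are assumptions for illustration. Any remainder whose norm falls below `tol` is treated as zero and skipped.

```python
import math


def gram_schmidt(vectors, tol=1e-10):
    """Orthonormal basis for the span of `vectors` (modified Gram-Schmidt).

    Each vector has its projections onto the basis built so far removed.
    If what remains is (numerically) zero, the vector was a linear
    combination of earlier ones, so it is skipped.
    """
    basis = []
    for v in vectors:
        w = [float(x) for x in v]
        for q in basis:
            proj = sum(wi * qi for wi, qi in zip(w, q))
            w = [wi - proj * qi for wi, qi in zip(w, q)]
        norm = math.sqrt(sum(wi * wi for wi in w))
        if norm > tol:
            basis.append([wi / norm for wi in w])
    return basis
```

Given `[[1, 1, 0], [2, 2, 0], [1, 0, 0]]`, the second vector is twice the first and is dropped. This leaves two orthonormal vectors, approximately (0.707, 0.707, 0) and (0.707, -0.707, 0).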

Related Concepts

- Matrix diagonalization, linear independence, and the span of a set of vectors.

Understanding Eigenvalues, Eigenvectors, and Eigenspace: A Beginner’s Guide

In the realm of linear algebra, eigenvalues and eigenvectors are fundamental concepts that provide valuable insights into the behavior of matrices. They play a crucial role in various scientific and engineering applications, such as stability analysis, vibration analysis, and quantum mechanics.

Eigenvalues: The Essence of Linear Transformations

Imagine a linear transformation, a mathematical operation that maps vectors to vectors while preserving addition and scaling, for example by stretching, reflecting, or rotating them. The eigenvalues of the matrix representing this transformation are the scaling factors it applies to its eigenvectors. Each real eigenvalue marks a direction along which the transformation simply stretches or shrinks vectors, flipping them when the eigenvalue is negative.
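A short Python illustration of this scaling follows. The matrix is chosen for illustration, and `mat_vec` is a hypothetical helper for matrix-vector multiplication.

```python
def mat_vec(A, v):
    """Multiply matrix A (list of rows) by vector v."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]


A = [[2, 1], [1, 2]]

# (1, 1) is an eigenvector with eigenvalue 3: A only stretches it.
print(mat_vec(A, [1, 1]))   # [3, 3] == 3 * [1, 1]
# (1, -1) is an eigenvector with eigenvalue 1: A leaves it unchanged.
print(mat_vec(A, [1, -1]))  # [1, -1] == 1 * [1, -1]
# (1, 0) is not an eigenvector: its image (2, 1) points in a new direction.
print(mat_vec(A, [1, 0]))   # [2, 1]
```

The first two vectors keep their direction and are scaled by 3 and 1, the eigenvalues of this matrix. The third is sent off its original line.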

Eigenvectors: Travelling in Eigenspaces

For each eigenvalue, there exists at least one eigenvector, a non-zero vector that remains parallel to itself under the transformation. The set of all eigenvectors corresponding to a specific eigenvalue, together with the zero vector, forms an eigenspace, a subspace of the original vector space. Eigenvalues and eigenvectors are essential for understanding the dynamics of linear transformations and the behavior of the system they represent.
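That an eigenspace is a subspace can be checked on a small example: any nonzero linear combination of eigenvectors for the same eigenvalue is again an eigenvector for that eigenvalue. The sketch below is minimal; the matrix and the helpers `mat_vec` and `is_eigenvector` are chosen for illustration.

```python
def mat_vec(A, v):
    """Multiply matrix A (list of rows) by vector v."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]


def is_eigenvector(A, v, lam):
    """True if v is nonzero and A v == lam * v."""
    return any(x != 0 for x in v) and mat_vec(A, v) == [lam * x for x in v]


A = [[2, 0, 0], [0, 2, 0], [0, 0, 5]]
v1, v2 = [1, 0, 0], [0, 1, 0]  # both eigenvectors for eigenvalue 2

combo = [3 * a - 4 * b for a, b in zip(v1, v2)]  # 3*v1 - 4*v2 = [3, -4, 0]
print(is_eigenvector(A, combo, 2))  # True: still in the eigenspace for 2
# Mixing in an eigenvector for a different eigenvalue leaves the eigenspace.
print(is_eigenvector(A, [1, 0, 1], 2))  # False
```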

Seeking Eigenvalues: A Puzzle to Solve

To find the eigenvalues of a matrix A, we solve its characteristic equation det(A - λI) = 0, a polynomial equation in the unknown λ. The roots of this equation are the eigenvalues.

Unveiling Eigenvectors: A System to Conquer

Once we have the eigenvalues, we determine the eigenvectors by solving systems of linear equations. Each eigenvalue gives rise to a system that yields the corresponding eigenvectors, which span the respective eigenspace.

Linear Independence: A Critical Check

Not every set of eigenvectors is equally useful. We need to verify that the eigenvectors are linearly independent, meaning that none of them is a linear combination of the others. Linear independence ensures that every vector in an eigenspace has a unique representation in terms of the basis eigenvectors. When the independent eigenvectors from all eigenvalues together number n, they form a basis for the original n-dimensional vector space.

Matrix Diagonalization: The Ultimate Transformation

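In some cases, a matrix can be diagonalized, meaning it can be written as A = PDP⁻¹, where D is a diagonal matrix with the eigenvalues on its diagonal and the columns of P are corresponding eigenvectors. This is possible exactly when an n×n matrix has n linearly independent eigenvectors. Diagonalization reveals the inherent structure of the matrix and allows for efficient computations. For example, A^k = PD^kP⁻¹, where D^k is found by raising each diagonal entry to the k-th power.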
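Here is a small check of diagonalization in Python. If the eigenvectors of A form the columns of P and the matching eigenvalues sit on the diagonal of D, the product PDP⁻¹ should reproduce A. The matrix, its eigenvectors, and the helpers `mat_mul` and `inverse_2x2` (which uses the 2×2 adjugate formula) are chosen for illustration. Exact `Fraction` arithmetic keeps the comparison with A exact.

```python
from fractions import Fraction


def mat_mul(X, Y):
    """Product of two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def inverse_2x2(M):
    """Inverse of a 2x2 matrix via the adjugate formula (exact Fractions)."""
    (a, b), (c, d) = M
    det = Fraction(a * d - b * c)
    if det == 0:
        raise ValueError("matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]


A = [[2, 1], [1, 2]]
# Columns of P are eigenvectors: (1, 1) for eigenvalue 3 and (1, -1) for eigenvalue 1.
P = [[1, 1], [1, -1]]
D = [[3, 0], [0, 1]]  # eigenvalues in the same order as the columns of P

reconstructed = mat_mul(mat_mul(P, D), inverse_2x2(P))
print(reconstructed == A)  # True
```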

Span of a Vector Space: A Pathway to Understanding

The span of a set of vectors is the set of all possible linear combinations of those vectors. A basis for an eigenspace spans that eigenspace. When a matrix is diagonalizable, its eigenvectors together span the entire vector space, providing a basis for representing other vectors and understanding the geometry of the space.

Eigenvalues, eigenvectors, and eigenspace are fundamental pillars of linear algebra. They provide critical insights into linear transformations, help us understand the behavior of systems, and play a vital role in diverse scientific and engineering applications. By grasping these concepts, we empower ourselves with a powerful tool for analyzing and solving real-world problems.