Unlock Flexibility In Matrices: Unveiling Free Variables And System Coherence

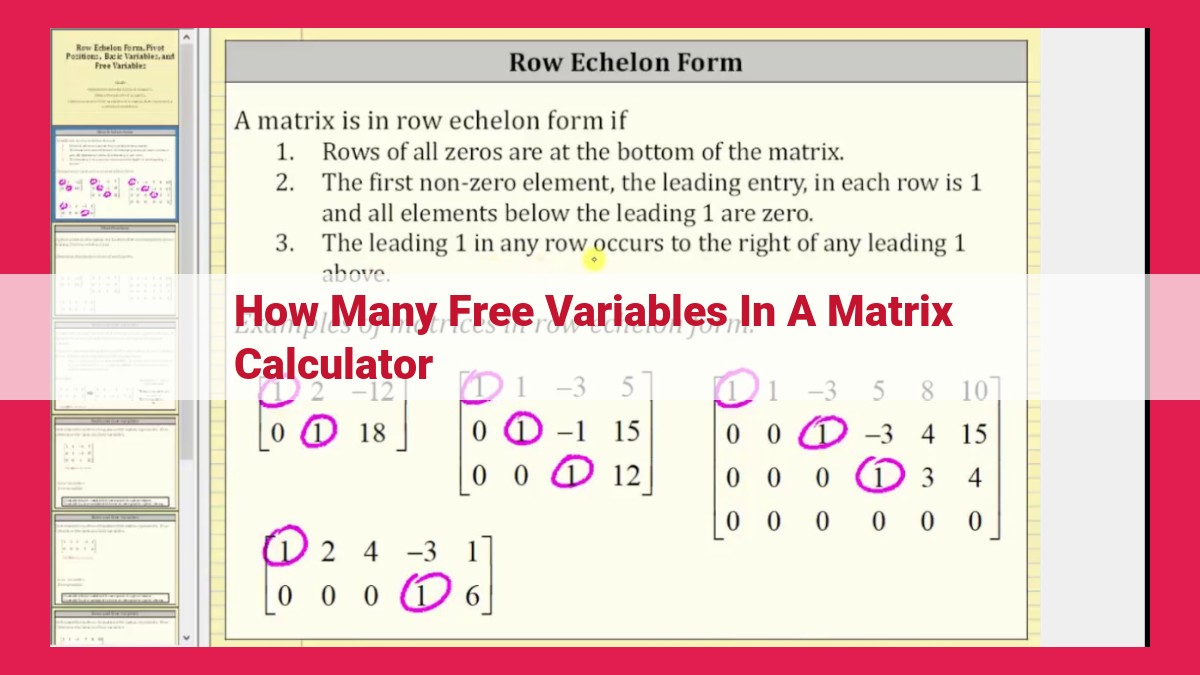

Matrices, representing data relationships, can contain free variables that determine system flexibility. The number of free variables is calculated as the number of matrix columns minus its rank, where the rank is the number of linearly independent columns (or, equivalently, rows). This formula provides a practical approach to understanding a matrix’s structure and the number of variables that can be chosen independently while still satisfying the system.

Matrix Muscles: Flexing and Flowing with Variables

Matrices, building blocks of data, are like a language that lets us organize and analyze information. Think of them as grids, storing numbers, patterns, and relationships. Free variables, like the athletes of this matrix gym, bring flexibility, allowing us to navigate these relationships with ease. Together, matrices and free variables offer a powerful lens to understand the world around us.

The Key: Unveiling Rank

Rank, like a treasure map, reveals the fundamental structure of a matrix. This hidden gem tells us how many linearly independent rows or columns we have. And guess what? The dimensions of our row and column spaces, like secret chambers, are both equal to this rank.

Dependent or Independent? A Tale of Two Variables

Variables, like a soap opera, can have their fortunes change. Correlation whispers hints of potential connections, but causality is the drama queen that reveals the true culprit. Experimentation becomes our director, carefully choreographing the variables’ dance to determine who’s the leading star (dependent) and who’s the supporting act (independent).

Nullspace and Subspaces: Unveiling the Invisible

Nullspace, the matrix’s hidden lair, is the set of vectors that vanish into thin air when multiplied by the matrix. It’s like a mysterious potion that reveals the inner workings of the matrix, showing us its hidden dimensions. Subspaces, like exclusive clubs, are cozy corners within the vector space, each with its own clique of vectors.

Free to be… Variables

Linear independence is the keystone that unlocks the matrix’s secrets. It’s the superpower that reveals which columns of our matrix are putting in the work. Basis vectors, like rockstar mathematicians, represent each subspace, and their number is the subspace’s dimension.

The Magic Number: Free Variable Formula

Finally, we unleash the formula: Number of Columns – Rank of Matrix. This super secret code gives us the passport to the number of free variables, showing us just how flexible our system really is.

Concept of Rank: The Foundation for Understanding Variables

In the realm of matrices, a fundamental concept emerges that serves as a cornerstone for comprehending the nature of variables: the rank. The rank of a matrix unveils its hidden structure, revealing the linearly independent elements that weave together the tapestry of data.

The row rank and column rank of a matrix are the dimensions of its row space and column space, respectively. These spaces are the spans of all possible linear combinations of the matrix’s rows or columns. The two ranks are always equal, which is why we can speak simply of the rank of a matrix. The higher the rank, the more linearly independent rows and columns a matrix possesses, and the fewer variables are left free.
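As a rough illustration, the rank can be found by Gaussian elimination: reduce the matrix and count the pivots. The sketch below uses only the Python standard library. The helper names `rank` and `transpose` and the matrix `A` are made up for this example and do not come from any particular library.

```python
from fractions import Fraction

def rank(matrix):
    """Return the rank of a matrix (a list of rows) by counting pivots."""
    rows = [[Fraction(x) for x in row] for row in matrix]
    n_rows = len(rows)
    n_cols = len(rows[0]) if rows else 0
    pivot_row = 0
    for col in range(n_cols):
        if pivot_row == n_rows:
            break
        # Look for a row at or below pivot_row with a nonzero entry in this column.
        pivot = next((r for r in range(pivot_row, n_rows) if rows[r][col] != 0), None)
        if pivot is None:
            continue  # no pivot in this column
        rows[pivot_row], rows[pivot] = rows[pivot], rows[pivot_row]
        # Eliminate the entries below the pivot.
        for r in range(pivot_row + 1, n_rows):
            factor = rows[r][col] / rows[pivot_row][col]
            rows[r] = [a - factor * b for a, b in zip(rows[r], rows[pivot_row])]
        pivot_row += 1
    return pivot_row

def transpose(matrix):
    """Swap rows and columns."""
    return [list(col) for col in zip(*matrix)]

# Hypothetical example: the second row is twice the first, so it adds nothing new.
A = [
    [1, 2, 3],
    [2, 4, 6],
    [1, 0, 1],
]

row_rank = rank(A)                # independent rows
column_rank = rank(transpose(A))  # independent columns
print(row_rank, column_rank)
```

Running the elimination on the matrix and on its transpose gives the same count, which is the row rank and column rank agreeing in practice. Exact fractions are used instead of floats so that a zero entry is recognised as exactly zero.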

Just as a building’s foundation supports its superstructure, the rank of a matrix underpins its flexibility. The rank equals the number of pivot variables, those whose values are fixed once the free variables have been chosen. A system whose rank is close to its number of columns offers little wiggle room, and when the two are equal there are no free variables at all. A system with a low rank relative to its number of columns allows for considerable freedom.

Understanding the rank of a matrix is crucial for deciphering the intricacies of variable relationships. It guides the exploration of dependent and independent variables, paving the way for meaningful analysis and informed decision-making.

Distinguishing Dependent and Independent Variables: The Essence of Relationships

In the realm of data analysis, understanding the nature of relationships between variables is crucial. Two fundamental types of variables emerge in this context: dependent and independent variables. Grasping the distinction between them is pivotal for interpreting data and drawing meaningful conclusions.

Correlation and Causality: A Delicate Balance

Variables often exhibit correlation, a mutual relationship where changes in one variable tend to be accompanied by changes in the other. However, correlation does not imply causation. Establishing a causal relationship requires experimental design, which involves manipulating one variable (independent) while controlling others to determine its direct impact on the outcome (dependent).

Unveiling the Dependent: The Variable Under Observation

Dependent variables are the variables being observed and measured in a study. They are affected by changes in the independent variable. For instance, in a scientific experiment examining the impact of fertilizer on plant growth, plant height would be the dependent variable. Its value depends on the amount of fertilizer applied.

Introducing the Independent: The Variable in Control

Independent variables are the variables manipulated or controlled in an experiment. They are the cause of change in the dependent variable. In our fertilizer experiment, the amount of fertilizer applied is the independent variable. Researchers control this variable to observe its impact on plant height.

Unveiling the Hidden Dimensions: Nullspace and Subspaces

In the realm of matrices, where data and relationships intertwine, understanding the nature of the nullspace and subspaces is crucial for unraveling the hidden dimensions that shape our world. To begin, let’s delve into the concept of the nullspace.

The nullspace, often denoted Nul(A), is the set of all solutions x to the matrix equation Ax = 0. It is also called the kernel of the matrix. Each vector in the nullspace lists the coefficients of a linear combination of the matrix’s columns that adds up to the zero vector. In other words, the nullspace is the set of all vectors that “disappear” when multiplied by the matrix.

Moreover, the nullspace is a subspace, which simply means that it is a subset of the larger vector space that satisfies the properties of a vector space itself. The nullspace inherits the vector space properties, such as closure under addition and scalar multiplication, from the larger vector space. This makes it a distinct and well-defined entity within the broader mathematical landscape.
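To make this concrete, here is a minimal sketch that builds a basis for the nullspace from the reduced row echelon form and then checks that each basis vector really is sent to zero. The function names `rref`, `nullspace_basis`, and `multiply`, and the matrix `A`, are assumptions chosen for this illustration.

```python
from fractions import Fraction

def rref(matrix):
    """Return (reduced rows, pivot column indices) for a list-of-rows matrix."""
    rows = [[Fraction(x) for x in row] for row in matrix]
    n_rows, n_cols = len(rows), len(rows[0])
    pivots, r = [], 0
    for c in range(n_cols):
        if r == n_rows:
            break
        pivot = next((i for i in range(r, n_rows) if rows[i][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        lead = rows[r][c]
        rows[r] = [x / lead for x in rows[r]]  # scale the pivot to 1
        for i in range(n_rows):
            if i != r and rows[i][c] != 0:
                factor = rows[i][c]
                rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        pivots.append(c)
        r += 1
    return rows, pivots

def nullspace_basis(matrix):
    """Return one basis vector of the nullspace per non-pivot column."""
    reduced, pivots = rref(matrix)
    n_cols = len(matrix[0])
    basis = []
    for free in (c for c in range(n_cols) if c not in pivots):
        vec = [Fraction(0)] * n_cols
        vec[free] = Fraction(1)  # set this free variable to 1, the others to 0
        for row_index, pivot_col in enumerate(pivots):
            vec[pivot_col] = -reduced[row_index][free]
        basis.append(vec)
    return basis

def multiply(matrix, vector):
    """Compute the matrix-vector product."""
    return [sum(Fraction(a) * x for a, x in zip(row, vector)) for row in matrix]

# Hypothetical 2 x 4 matrix with two pivot columns and two non-pivot columns.
A = [
    [1, 2, 1, 0],
    [0, 0, 1, 1],
]

basis = nullspace_basis(A)
for v in basis:
    print([str(x) for x in v], "->", [str(x) for x in multiply(A, v)])
```

Because sums and scalar multiples of these basis vectors are also sent to zero, the check on the basis is enough to cover the whole nullspace.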

Linear Independence: The Key to Matrix Characterization

Imagine you’re a detective piecing together a puzzle, where each piece represents a column vector in a matrix. Linear independence is like a litmus test that tells you which pieces, or vectors, are unique and indispensable. When vectors are linearly independent, it means they can’t be created by combining multiples of other vectors in the matrix. They stand alone, providing essential information without redundancy.

In this detective game, a basis for the column space is a set of linearly independent columns that spans it: no vector in the set is redundant, and none can be left out. These “key” vectors form the foundation, guaranteeing that every vector in the column space (including each remaining column of the matrix) can be expressed as a unique combination of the basis vectors. The number of basis vectors is the dimension of the subspace, which tells us how many independent directions exist within the matrix.

Just as in real-life puzzles, where you might have extra or missing pieces, matrices can have varying degrees of linear independence. The maximum number of linearly independent columns is equal to the matrix’s rank, which is a crucial measure of its structure and flexibility. By understanding linear independence, we unveil the inner workings of matrices, revealing their hidden dimensions and enabling us to characterize their unique identities.
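One way to run the detective’s litmus test is to see which columns receive a pivot during elimination. The sketch below is an illustration under that assumption. `pivot_columns` and the matrix `A` are hypothetical names invented for this example.

```python
from fractions import Fraction

def pivot_columns(matrix):
    """Return the indices of the columns that hold a pivot after forward elimination."""
    rows = [[Fraction(x) for x in row] for row in matrix]
    n_rows, n_cols = len(rows), len(rows[0])
    pivots, r = [], 0
    for c in range(n_cols):
        if r == n_rows:
            break
        pivot = next((i for i in range(r, n_rows) if rows[i][c] != 0), None)
        if pivot is None:
            continue  # this column is a combination of the earlier pivot columns
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(r + 1, n_rows):
            factor = rows[i][c] / rows[r][c]
            rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        pivots.append(c)
        r += 1
    return pivots

# Hypothetical matrix: column 2 equals column 0 plus column 1, so it is redundant.
A = [
    [1, 0, 1],
    [0, 1, 1],
    [2, 3, 5],
]

pivots = pivot_columns(A)
basis = [[row[c] for row in A] for c in pivots]  # pivot columns: a basis for the column space
independent = len(pivots) == len(A[0])           # True only if every column has a pivot
print(pivots, basis, independent)
```

The pivot columns of the original matrix form a basis for its column space, and the number of pivots is the rank. Here the third column gets no pivot, so the full set of columns is not linearly independent.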

Calculating the Number of Free Variables: A Practical Approach

In the realm of matrices and variables, where systems of equations dance and linear relationships unfold, we encounter an intriguing concept known as free variables. These elusive creatures represent the degrees of freedom within a system, revealing its flexibility and adaptability. Grasping the concept and its mathematical underpinnings is essential in unraveling the mysteries that matrices hold.

The formula for calculating the number of free variables is a guiding star in this exploration:

Number of Free Variables = Number of Columns – Rank of Matrix

Let’s illuminate this formula with a concrete example. Consider a matrix with 4 columns and a rank of 2. Plugging these values into our formula, we get:

Number of Free Variables = 4 – 2 = 2
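The same arithmetic can be checked on an actual matrix. The sketch below assumes a made-up 3 x 4 matrix whose third row is the sum of the first two, so that it has 4 columns and rank 2. The names `rank`, `count_free_variables`, and `A` are illustrative choices.

```python
from fractions import Fraction

def rank(matrix):
    """Count the pivots found by forward elimination on a list-of-rows matrix."""
    rows = [[Fraction(x) for x in row] for row in matrix]
    n_rows, n_cols = len(rows), len(rows[0])
    r = 0
    for c in range(n_cols):
        if r == n_rows:
            break
        pivot = next((i for i in range(r, n_rows) if rows[i][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(r + 1, n_rows):
            factor = rows[i][c] / rows[r][c]
            rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r

def count_free_variables(matrix):
    """Apply the formula: number of columns minus rank."""
    return len(matrix[0]) - rank(matrix)

# Hypothetical matrix: row 2 = row 0 + row 1, so only two rows are independent.
A = [
    [1, 0, 2, 1],
    [0, 1, 1, 3],
    [1, 1, 3, 4],
]

free = count_free_variables(A)
print(rank(A), free)
```

Note that the count depends on the number of columns (the unknowns), not on the number of rows, so adding redundant equations leaves it unchanged.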

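Two free variables mean that the solution set of Ax = 0 for such a matrix has 2 degrees of freedom. Now apply the same formula to a system of two equations with three variables:

x + y + z = 0

2x + 3y + 4z = 0

The coefficient matrix has 3 columns, and its two rows are not multiples of each other, so its rank is 2. The formula gives 3 – 2 = 1 free variable. Subtracting twice the first equation from the second leaves y + 2z = 0, so y = -2z, and substituting back into the first equation gives x = z. We can assign an arbitrary value to one variable (let’s say z), and the other two (x and y) are then determined: every solution has the form (z, -2z, z). The single free variable reflects the flexibility of this system, allowing us to explore different solutions without violating the constraints.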

Understanding the concept of free variables is a cornerstone in the study of matrices and variables. It unveils the hidden dimensions within systems, empowering us to analyze their behavior and predict their outcomes.