Comprehensive Guide To Calculating Covariance Matrices: Sample And Population

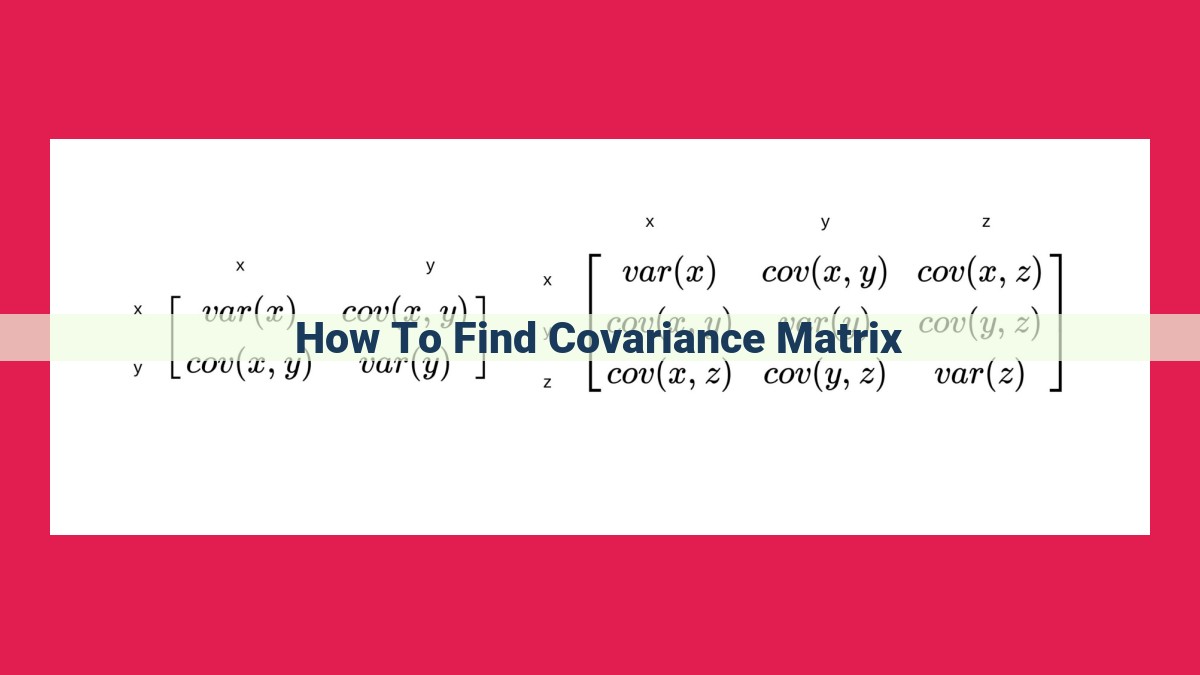

To find the covariance matrix, first calculate the mean of each variable and subtract it from each data point. Then, compute the pairwise products of these deviations and store them in a matrix. Finally, divide each element of the matrix by the number of observations minus one to obtain the sample covariance matrix. You can also calculate the population covariance matrix using similar steps, but without dividing by the number of observations minus one.

In the world of data analysis, understanding the relationships between different variables is crucial for making informed decisions. The covariance matrix, a powerful statistical tool, unveils these relationships, offering a comprehensive picture of how variables interact within a dataset. Let’s delve into the enchanting world of covariance matrices and uncover their significance in statistics.

What is a Covariance Matrix?

Think of the covariance matrix as a roadmap that guides us through the complex terrain of data. It’s a square matrix that portrays the covariances between each pair of variables in a dataset. The covariance, a numerical value, measures the joint variability of two random variables.

Significance in Statistics

The covariance matrix is an indispensable tool for statistical inference and data analysis. It’s used in various fields, including:

- Multivariate Analysis: Exploring relationships among multiple variables

- Risk Management: Assessing the risk of financial investments

- Dimensionality Reduction: Simplifying data by identifying patterns and relationships

Types of Covariance Matrices

The covariance matrix can take different forms depending on the nature of the data:

- Sample Covariance Matrix: Estimates the true covariance matrix from a random sample of data.

- Population Covariance Matrix: Represents the true covariance matrix of the entire population.

The covariance matrix empowers us to uncover the hidden relationships lurking within data. By understanding covariance and its applications, we can unlock the full potential of data analysis, enabling us to make better decisions and gain deeper insights into the world around us.

Types of Covariance Matrices

Understanding the multifaceted nature of covariance matrices is crucial for unraveling the intricate tapestry of data analysis. These matrices unveil the interdependencies and relationships between multiple variables, weaving a web of insights that illuminate the underlying patterns and structures within complex datasets.

Sample Covariance Matrix

Sample covariance matrix captures the covariance between pairs of variables within a sample of data. It provides a tangible representation of the covariations observed in the sample, mimicking the behavior of sample variances. However, it remains an imperfect reflection of the true relationships within the entire population.

Delving deeper, we can decompose the sample covariance matrix into its constituent parts, revealing its eigenvalues and eigenvectors. These hidden treasures provide a glimpse into the matrix’s internal structure, allowing us to identify the principal components that account for the majority of its variance.

Population Covariance Matrix

In contrast, the population covariance matrix paints a more profound picture, capturing the covariance between all pairs of variables within the entire population. It serves as the gold standard of covariance matrices, representing the true relationships that exist within the data-generating process.

Just like its sample counterpart, the population covariance matrix can be subjected to matrix decomposition, yielding eigenvalues and eigenvectors. These insights provide a foundation for understanding the intrinsic dimensionality of the data and the underlying relationships that shape it.

By exploring both the sample and population covariance matrices, we gain a multifaceted understanding of the dataset, empowering us to make informed decisions and draw reliable conclusions. These matrices serve as essential tools for data scientists, statisticians, and anyone seeking to unravel the mysteries hidden within complex datasets.

Covariance Formula and Calculations: Unveiling the Relationship between Variables

Understanding covariance is crucial in data analysis as it quantifies the linear dependence between two variables. Its formula offers a step-by-step approach to calculating the covariance:

-

Calculate the mean of each variable: Subtract the sum of observations divided by the number of observations from each data point.

-

Calculate the deviations from the mean: Subtract the mean from each data point.

-

Multiply the deviations of each pair of data points: Pairwise create products between the deviations of two variables.

-

Sum the products obtained in step 3: Add up all the product pairs.

-

Divide the sum by the number of observations minus one: Divide the result from step 4 by the sample size minus one.

This formula reveals a fascinating relationship with variance and expected value. Variance characterizes the spread of data points around the mean, while expected value represents the average value of a distribution. Covariance extends this concept by capturing the joint variability between two variables, measuring how they tend to move together.

Linear algebra concepts play a crucial role in understanding covariance. Covariance is a symmetric matrix with the diagonal elements representing variances and the off-diagonal elements representing covariances. Matrix operations such as eigenvalue decomposition and singular value decomposition provide valuable insights into the structure of covariance matrices, aiding in dimensionality reduction and feature extraction.

Properties of Covariance

Additivity:

Covariance is an additive measure, meaning that the covariance of the sum of two random variables (X and Y) is equal to the sum of their individual covariances with a constant. This property allows us to decompose covariance into smaller components, making it easier to analyze the relationship between multiple variables.

Scaling:

The covariance is a linear function of each variable. If we multiply one of the variables by a constant, the covariance will be multiplied by that constant. This property helps us understand the relative strength of relationships between variables.

Symmetry:

Covariance is a symmetric measure. The covariance of X and Y is equal to the covariance of Y and X. This symmetry reflects the fact that the relationship between two variables is bidirectional.

Positive Semi-Definiteness:

The covariance matrix is always positive semi-definite. This means that the eigenvalues of the covariance matrix are non-negative. This property is crucial in many statistical inference procedures, such as principal component analysis and discriminant analysis.

Correlation Matrix: Unveiling the Web of Relationships

Imagine a world where data takes center stage, and understanding the intricate connections between variables becomes paramount. In this realm, the correlation matrix emerges as a powerful tool, unraveling the tapestry of relationships that exists within your data set.

A correlation matrix is a square matrix that displays the correlation coefficients between each pair of variables in a data set. These coefficients measure the strength and direction of the linear association between variables. Positive values indicate a positive correlation, where as the value of one variable increases, the value of the other tends to increase as well. Negative values, on the other hand, signify a negative correlation, whereby an increase in one variable is associated with a decrease in the other.

The correlation coefficient, often denoted by the Greek letter rho (ρ), ranges from -1 to 1. A correlation of -1 indicates a perfect negative correlation, 0 represents no linear association, and +1 denotes a perfect positive correlation.

The interpretation of correlation coefficients can provide valuable insights into the underlying relationships within your data. For example, if you observe a high positive correlation between the price of a stock and its earnings, it suggests that the two variables move in tandem. Conversely, a strong negative correlation between temperature and rainfall implies that as temperature increases, precipitation tends to decrease.

The correlation matrix not only reveals the strength and direction of relationships but also provides a visual representation of the interconnectedness among variables. By examining the matrix, you can quickly identify clusters of highly correlated variables, which can be indicative of underlying factors or latent structures within your data.

The construction of a correlation matrix involves calculating the covariance between each pair of variables and then standardizing the covariance values to obtain the correlation coefficients. This process utilizes concepts from linear algebra and probability theory.

In essence, the correlation matrix is a versatile tool that allows you to explore and understand the relationships within your data, making it indispensable for data analysis, feature selection, and statistical modeling.

Relationship Between Covariance and Correlation

In the world of data analysis, covariance and correlation are two fundamental concepts that go hand in hand. Covariance measures the linear relationship between two variables, while correlation is a standardized measure of covariance that adjusts for the scale of the variables involved.

To understand the relationship between covariance and correlation, let’s dive into their formulas:

- Covariance: Cov(X, Y) = E[(X – μ_X)(Y – μ_Y)]

- Correlation: Corr(X, Y) = Cov(X, Y) / (σ_Xσ_Y)

where:

– E[] denotes expected value

– μ_X and μ_Y are the mean values of X and Y

– σ_X and σ_Y are the standard deviations of X and Y

Standardization of Covariance:

The key to understanding the relationship between covariance and correlation lies in the standardization step. By dividing covariance by the product of standard deviations, we obtain correlation. This process removes the influence of scale from the covariance, allowing us to compare the linear relationships between variables that may have different units or magnitudes.

Scale Invariance of Correlation:

Correlation is scale invariant, meaning that its value remains the same regardless of the units or scale of the original variables. For example, if you measure height in inches or centimeters, the correlation between height and weight will be identical. This property makes correlation a valuable tool for comparing relationships across different datasets.

Range of Values for Correlation Coefficient:

Correlation coefficients can range from -1 to 1, where:

– -1: Perfect negative correlation (variables move in opposite directions)

– 0: No correlation (variables are independent)

– 1: Perfect positive correlation (variables move in the same direction)

Understanding the relationship between covariance and correlation is essential for data analysis and interpretation. Covariance provides a measure of the linear relationship between variables, while correlation allows us to compare these relationships across different variables and scales. By mastering these concepts, you can unlock deeper insights from your data and make informed decisions based on statistical evidence.

Practical Applications of Covariance Matrix

Beyond theoretical understanding, the covariance matrix finds numerous practical applications in various fields:

Variance Decomposition and Principal Component Analysis (PCA)

Covariance plays a pivotal role in variance decomposition, which helps identify the dominant sources of variability within a dataset. This technique involves calculating the eigenvalues of the covariance matrix, which represent the variance explained by each principal component. PCA further utilizes these principal components to reduce the dimensionality of the dataset while preserving maximum information.

Risk Management and Portfolio Optimization

In the financial realm, the covariance matrix is essential for risk management and portfolio optimization. It allows investors to assess the co-movement of different assets, providing insights into their potential risks and returns. By maximizing portfolio diversification and minimizing covariance, investors can optimize their portfolios to reduce risk and enhance returns.

Feature Selection and Dimensionality Reduction

Covariance also plays a crucial role in feature selection and dimensionality reduction. In machine learning and data analysis, it helps identify highly correlated or redundant features that may not contribute significantly to model performance. By eliminating such features, algorithms can operate more efficiently and effectively, improving their accuracy and interpretability.

These practical applications demonstrate the far-reaching significance of the covariance matrix in various disciplines, from data analysis to finance and beyond. Its ability to capture the relationships between variables makes it an invaluable tool for understanding and harnessing the power of complex datasets.