Comparing Vector Distance Measures For Data Analysis And Machine Learning: A Comprehensive Guide

Measuring the distance between vectors is crucial in various fields. Euclidean distance calculates the straight-line distance, while Manhattan distance sums the absolute differences. Hamming distance counts non-matching elements, and cosine similarity measures the angle between vectors. The dot product is used to calculate the sum of products of corresponding elements. Choosing the right distance measure depends on the application, such as data analysis or machine learning. Understanding these measures enables effective data analysis and accurate results.

- Importance of measuring the distance between vectors

- Overview of vector space and distance measures

The Power of Measuring Vector Distances: Unlocking Insights in Data Analytics

In the realm of data analysis and machine learning, vectors are mathematical objects that represent multidimensional data points. Understanding the distance between vectors is crucial for extracting meaningful insights and making informed decisions. By measuring vector distances, we can discover similarities, identify patterns, and solve complex problems.

Vector Space and Distance Measures

Vectors reside in vector spaces, which are geometric structures that define rules for vector operations, including distance calculations. Distance measures quantify the separation between two vectors in a vector space. These measures provide a numerical representation of the relative closeness or difference between data points.

The choice of distance measure depends on the specific requirements of the analysis. Some common distance measures include:

- Euclidean distance: Calculates the straight-line distance between vectors.

- Manhattan distance: Sums the absolute differences along each dimension.

- Hamming distance: Counts the number of non-matching elements in binary vectors.

- Cosine similarity: Measures the angle between vectors, indicating similarity.

- Dot product: Calculates the sum of products of corresponding vector elements, related to cosine similarity and vector projection.

Euclidean Distance: Measuring the Straight-Line Path

In the realm of data analysis, measuring the distance between vectors is crucial for uncovering meaningful patterns and relationships. One of the most fundamental distance measures is the Euclidean distance, which calculates the straight-line distance between points in a vector space.

Imagine you’re lost in a vast forest, trying to navigate back to civilization. You encounter two different paths: a straight path and a winding one. Euclidean distance is like the straight path; it provides the shortest distance between your current location and the destination.

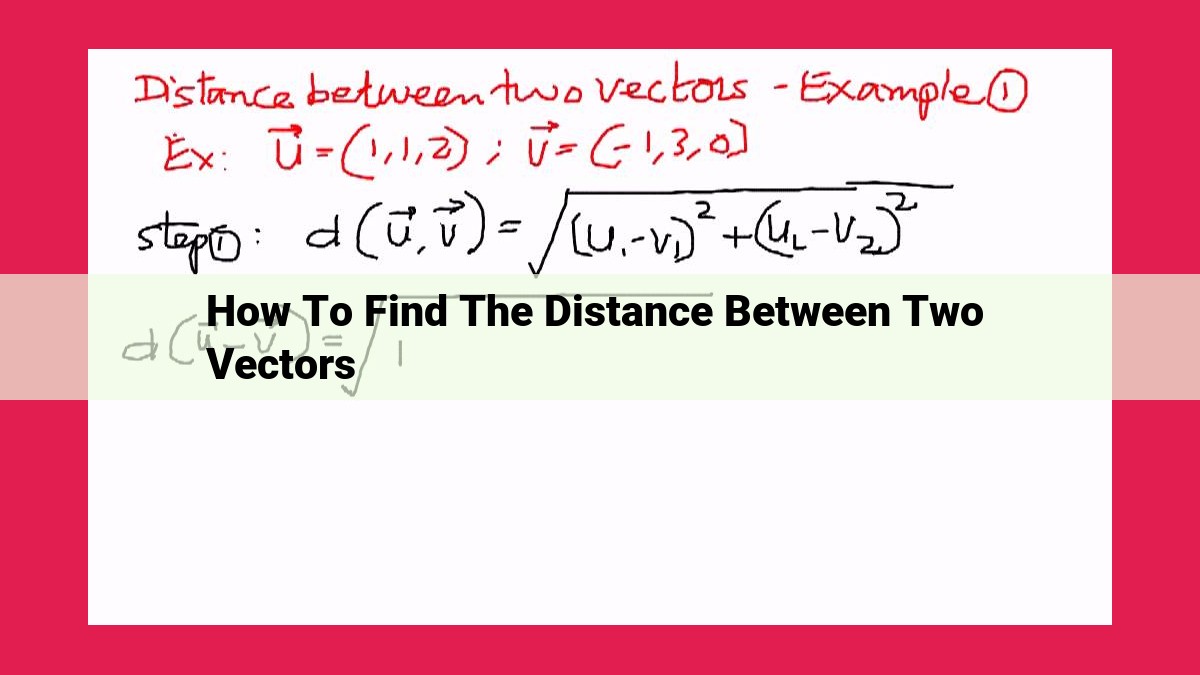

Formally, the Euclidean distance between two points (x) and (y) in an n-dimensional space is given by the formula:

$$d(x, y) = \sqrt{(x_1 – y_1)^2 + (x_2 – y_2)^2 + … + (x_n – y_n)^2}$$

where (x_i) and (y_i) are the corresponding coordinates of the points in each dimension.

Related Concepts

Euclidean distance shares a close kinship with two other distance measures:

-

Manhattan distance: Also known as city-block distance, it calculates the sum of the absolute differences along each dimension, resembling the journey you’d take on a grid-like city street.

-

Chebyshev distance: This distance measure is more conservative, taking the maximum absolute difference along any dimension. It’s like navigating around obstacles, always choosing the widest path.

Unveiling the Manhattan Distance: A Tale of City Blocks

In the bustling metropolis of data analysis, where vectors roam like skyscrapers, measuring the distance between them is crucial for navigating the urban sprawl. Among the many avenues of measuring distance, the Manhattan Distance stands out as a straightforward path, offering a unique perspective on vector geometry.

Picture a grid of city blocks, each representing a dimension of our vector space. The Manhattan Distance calculates the distance between two vectors by traversing this grid, summing the absolute difference along each dimension. As its name suggests, this path mimics the way one might travel through a Manhattan grid, navigating block by block.

Example: Consider vectors A = (2, 4) and B = (5, 1). To determine their Manhattan Distance, we calculate the absolute difference between each component: D = |5 – 2| + |1 – 4| = 3 + 3 = 6.

The Manhattan Distance shares a close bond with its Euclidean Distance cousin, which measures the straight-line distance between vectors. However, unlike Euclidean Distance, Manhattan Distance favors orthogonal (90-degree) movements, resulting in a stepped pattern rather than a smooth curve. This characteristic makes Manhattan Distance particularly useful in scenarios where movement is restricted to grid-like structures, such as chessboards or computer algorithms.

In the world of data mining and machine learning, Manhattan Distance plays a pivotal role in identifying patterns and making predictions. For image processing, it excels in comparing pixel intensities, while in text classification, it measures the similarity between strings by counting mismatched characters.

In conclusion, the Manhattan Distance serves as a valuable tool for traversing the intricate landscape of vector spaces. Its simplicity and grid-based approach make it a trusted guide for measuring distances in data-rich environments. By understanding the Manhattan Distance, data analysts and machine learning practitioners gain a deeper appreciation for the geometry of vectors and unlock new possibilities for data exploration and modeling.

Hamming Distance: Measuring the Mismatch Between Vectors

In the realm of vectors, the Hamming distance emerged as a crucial tool for quantifying the degree of dissimilarity between two vectors. Envision two sequences of characters, one written in red and the other in blue. The Hamming distance counts the number of positions where the characters differ in color.

For instance, consider the vectors “red” and “blue.” The Hamming distance between them is 3 because the first, second, and fourth characters (starting from zero) are mismatched. In essence, the Hamming distance measures the number of non-matching elements between vectors.

This concept finds extensive applications in various disciplines, including:

- Information theory: Calculating the number of bit errors in data transmission

- Error detection and correction: Identifying and correcting errors in data streams

- Pattern recognition: Identifying similar patterns by measuring the Hamming distance between input and stored patterns

Understanding the Hamming Distance

The Hamming distance is defined as the sum of the absolute differences between the corresponding elements of two vectors. In simpler terms, it counts the number of positions where the elements differ.

Formally, for two vectors a and b of equal length n, the Hamming distance is calculated as:

H(a, b) = Σ(a[i] != b[i])

where a[i] and b[i] are the elements at position i.

Applications of Hamming Distance

- DNA sequence analysis: Comparing DNA sequences to determine genetic similarities and differences

- Natural language processing: Identifying spelling errors and detecting plagiarism

- Image processing: Measuring the similarity between two images based on pixel values

Choosing the Right Distance Measure

While the Hamming distance is a versatile metric, it assumes that all elements are equally important. For scenarios where certain elements carry more weight, alternative distance measures like the Euclidean distance or Manhattan distance may be more appropriate. Understanding the nature of the data and the specific application is key to selecting the optimal distance measure.

Cosine Similarity: Measuring Vector Similarity with Angles

In the world of vectors, where data points roam, measuring their similarity becomes crucial for tasks like clustering, classification, and recommendation systems. Cosine similarity stands out as a powerful tool that calculates similarity based on the angle between two vectors.

Imagine two vectors, X and Y, as arrows in a multi-dimensional space. Cosine similarity measures the cosine of the angle formed by these arrows. The higher the cosine value (closer to 1), the more similar X and Y are. Conversely, a lower cosine value (closer to -1) indicates greater dissimilarity.

How Cosine Similarity Works:

Cosine similarity is formally defined as the dot product of X and Y divided by the product of their magnitudes:

Cosine Similarity = (X ⋅ Y) / (||X|| ||Y||)

Breaking it down:

- Dot product (X ⋅ Y): It calculates the sum of the products of corresponding elements of X and Y. Essentially, it measures the extent to which the vectors align.

- Magnitude (||X||): It represents the length of the vector. It helps normalize the similarity score, making it independent of the vectors’ length.

Applications of Cosine Similarity:

- Text similarity: Measuring the similarity between two documents to determine their relevance in search and retrieval systems.

- Image recognition: Comparing pixel values to identify similar images or detect objects in scenes.

- Recommendation systems: Grouping similar items, such as movies, products, or music, to make personalized recommendations.

- Cluster analysis: Identifying distinct groups within a dataset based on similarity measures.

- Bioinformatics: Analyzing genetic sequences to identify similarities and relationships between organisms.

Choosing the Right Similarity Metric:

Cosine similarity is not always the best choice. Other metrics, like Euclidean distance and Manhattan distance, may be more suitable for certain tasks. When selecting a similarity metric, consider factors like the data type, application, and the desired outcome.

Cosine similarity is a versatile tool for measuring vector similarity. By quantifying the angle between two vectors, it provides insights into their alignment and relatedness. Understanding cosine similarity and its applications empowers data scientists and analysts to effectively extract knowledge from complex datasets and build impactful models.

Dot Product: Unlocking the Secrets of Vector Relationships

In the realm of vector spaces, the dot product stands as a powerful tool for understanding the intricate relationships between vectors. This mathematical operation, denoted as <i style="font-style:italic;">a · b</i>, calculates the sum of the products of the corresponding elements of two vectors.

For example, consider the vectors <i style="font-style:italic;">a = (1, 2)</i> and <i style="font-style:italic;">b = (3, 4)</i>. Their dot product is:

<i style="font-style:italic;">a · b = 1 * 3 + 2 * 4 = 11</i>

The dot product provides valuable insights into vector geometry. It reveals the cosine of the angle between two vectors, making it a crucial concept in measuring vector similarity. A positive dot product indicates an acute angle, while a negative dot product signifies an obtuse angle.

Beyond measuring vector similarity, the dot product finds applications in various fields. In computer graphics, it’s used for:

- Projection: Calculating the projection of a vector onto another.

In machine learning, it’s essential for:

- Linear regression: Determining the relationship between input and output variables.

In physics, it’s used for:

- Work: Calculating the work done by a force along a displacement.

Understanding the dot product is paramount for effectively analyzing and interpreting data. It provides a powerful foundation for exploring vector relationships and unlocks a deeper understanding of the underlying mathematical concepts.

Choosing the Right Distance Measure

When analyzing data or building machine learning models, determining the appropriate distance metric can make a significant difference in the accuracy and interpretability of your results. Several factors should be considered when selecting a distance measure:

- Data Type and Representation: Different distance measures are suited to different types of data. For example, the Euclidean distance is commonly used for continuous numerical data, while the Hamming distance is appropriate for comparing binary data or strings.

- Number of Dimensions: The dimensionality of the data impacts the effectiveness of particular distance metrics. Some measures, such as the Cosine Similarity, become less informative as the number of dimensions increases.

- Normalization: If data values are on different scales, it’s crucial to normalize the data before computing distances to prevent bias towards features with larger ranges.

Examples of Applications

Distance metrics find wide applications in data analysis and machine learning:

- Clustering: Distance measures are used to group similar data points together into clusters. The Euclidean distance and Manhattan distance are common choices for clustering numerical data.

- Classification: In machine learning, distance measures are employed to determine the proximity of new data points to known class labels. The Cosine Similarity is often used for classifying text data, while the Hamming distance can be effective for classifying binary data.

- Dimensionality Reduction: Techniques like Principal Component Analysis (PCA) and t-SNE use distance measures to project high-dimensional data into lower-dimensional representations while preserving important relationships.

Understanding the strengths and limitations of different distance measures is essential for deriving meaningful insights from your data. By carefully selecting the right distance metric, you can enhance the accuracy and interpretability of your data analysis and machine learning endeavors.